Difference between revisions of "AMI Historical Database"

| Line 177: | Line 177: | ||

If we diagnose the table, we can see that once again, the AMIHDB is assuming the worst case | If we diagnose the table, we can see that once again, the AMIHDB is assuming the worst case | ||

scenario for the Integer column(FLAT) if you don’t declare the storage type for this column.<br> | scenario for the Integer column(FLAT) if you don’t declare the storage type for this column.<br> | ||

| − | <syntaxhighlight lang="amiscript">CREATE PUBLIC table FlatExample(id int, id2 int FLAT,id3 int | + | <syntaxhighlight lang="amiscript">CREATE PUBLIC table FlatExample(id int, id2 int FLAT,id3 int FLAT_NOMIN, id4 int FLAT_NONULL) USE PersistEngine="HISTORICAL"; |

| − | FLAT_NOMIN, id4 int FLAT_NONULL) USE PersistEngine="HISTORICAL"; | ||

Insert into FlatExample values(1,23,NULL,-2147483648); | Insert into FlatExample values(1,23,NULL,-2147483648); | ||

Diagnose table FlatExample;</syntaxhighlight> | Diagnose table FlatExample;</syntaxhighlight> | ||

Revision as of 08:49, 30 May 2023

Introduction to AMI Historical Database

The AMI Historical Table is a columnar-based on-disk table engine with support for partitioning. The introduction section will briefly describe the core concepts of the AMI Historical database: Partitioning, Data storage and optimizations.

Partitioning

Partitioning is used to organize data for performance. To understand why partitioning is critical

for large datasets, let’s first consider a non-partitioned standard table: as the number or rows

increases, the performance (time to insert, update, query and alter) will decline. Partitioning

provides an elegant solution by breaking up rows into separate isolated “virtual tables” called

partitions. Once data is broken into partitions, the row count of one partition will not affect the

performance of other partitions. In order to dictate how rows are organized into partitions, the

AMI Historical Table introduces a special PARTITION column type. The value in the PARTITION

column(s) determines which partition a given row will be assigned to. In other words, all rows for

a given partition will have the same value in the PARTITION column(s).

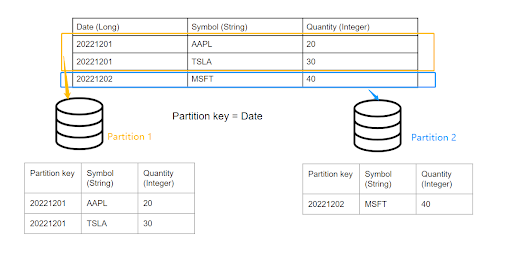

By way of example, data is often partitioned by a date: Imagine we have the following historical

table, partitioned by the column “date”

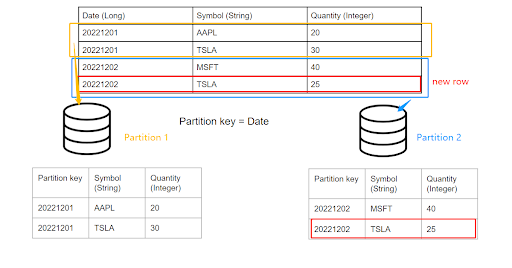

When inserting row(s) whose date match(es) the existing partition key(s), the query engine will automatically navigate to the corresponding partition according to the partition key and append the rows accordingly.

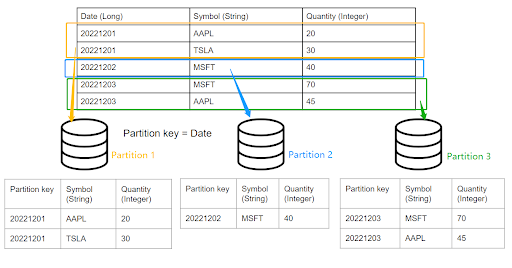

Note that adding another day of data will automatically create a new partition without affecting

existing days’ data: For example, if we want to insert two new rows into the table whose “Date”

== 20221203

To create a historical table add USE PersistEngine="HISTORICAL" and include the PARTITION keyword after the column(s) that the rows should be partitioned by. Ex:

CREATE PUBLIC TABLE PartitionDemo(date LONG PARTITION, symbol STRING, qty INTEGER, comments STRING) use PersistEngine="HISTORICAL";

The file structure of AMIHDB is such that each table has its own directory and each partition is

assigned its own subdirectory. Each column and index has its own file(s) per partition directory.

<amiroot>/hdb/Table_Name.3fhtab/Partition_Id.dfhpar/<Files within a partition>

- ● Use ami.hdb.root.dir property to override location of hdb root

- ● Use ami.hdb.filehandles.max property to control how many files can be open at a time

- ● Table_Name - is the name of the table

- ● Partition_Id - An auto-incrementing id (starting at 0001)

- ● Files within a partition:

- ○ KEY.3fhkey - This stores the (plaintext) key for this partition

- ○ ColumnName.3fhcol - Stores data for the column

- ○ IndexName.3fhidx - Stores index data

- ○ KEY.3fhkey - This stores the (plaintext) key for this partition

This paradigm is designed for fast bulk INSERTs, fast random SELECTs and fast DELETES of

entire partitions. UPDATES, and sparse DELETES are possible but will operate slower

Optimized for Schema Alterations

Each partition is a self-contained set of data. This means that altering a schema does not

impact the way existing partitions are saved on disk. Instead, AMIHDB will map the “old”

partitions using the “old” schema to the current schema definition at query time. This applies for

ADD, DROP and MODIFY of columns.

Important Note: The PARTITION columns are the only columns that can not be altered

after the table is created.

Data storage types and optimizations

The AMI historical database has different data storage types designed for data of various

natures and characteristics, such as length, cardinality and partition keys. While continuously

inserting, the nature of the data in the table is unpredictable so a more flexible but less efficient

strategy is used for storage. Therefore, after the partition is completed, it is important to optimize

the storage strategy to adapt to the existing data to improve query performance and minimize

disk storage. Data storage types and optimizations will be further discussed in Section 2: Data

types and storage types, and Section 4.1:Optimization on existing data.

PARTITION MODES

As a historical database, it is important to have a mode for fast insert-on-demand data. In

AMIHDB, when a new partition is created it will have a status of

IN_APPEND_MODE/NOT_OPTIMIZED. Then, after that table is optimized, the partition will

become optimized for data compression and query times.

There are two ways to force optimize partitions:

(a). By calling CALL __OPTIMIZE_HISTORICIAL_TABLE(“Your_Historical_Table_Name”)

(b). By restarting AMI Center

You can use the DIAGNOSE TABLE <Your_Hitorical_Table_Name> command to view the optimized status of each partition:

Before commit:

![]()

After commit:

![]()

Data Types and Storage Type

AMIHDB supports the same data types as the AMI Real-time database:

BYTE, SHORT, INT, LONG, FLOAT, DOUBLE, CHAR, UTC, UTCN, UUID, COMPLEX, BINARY,

STRING, BIGINT, BIGDEC

Each data type has several storage types each suited to optimize storage/retrieval depending

on the cardinality and/or length of data. In AMIHDB, there are four storage types:

FLAT, BITMAP, VARSIZE, PARTITION.

Important Note: Different partitions can have different storage types for the same column.

VARSIZE

VARSIZE is the storage engine for variable-length data types:

STRING,BINARY,BIGINT,BIGDEC

There are three varieties of VARSIZE:

VARSIZE3,VARSIZE4,VARSIZE5 (default is VARSIZE5)

The number at the end indicates the number of bytes of the address pointer. For example,

VARSIZE3 indicates that the pointer has an address size of 3 Bytes, meaning that it can

address up to approximately 16 Megabytes of data (256*256*256).

Here are is the amount of total data that a column can hold depending on the type:

| VARSIZE() | Size |

|---|---|

| VARSIZE3 | 16MB |

| VARSIZE4 | 4GB |

| VARSIZE5 | 1TB |

Note, that if data exceeds the size of a column type, AMIHDB will automatically reconfigure and

extend the VARSIZE type (Note: this will be an expensive operation though). AMIHDB will

default to VARSIZE5, if not explicitly specified in the CREATE TABLE schema.

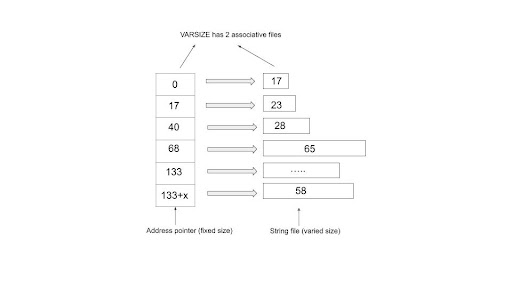

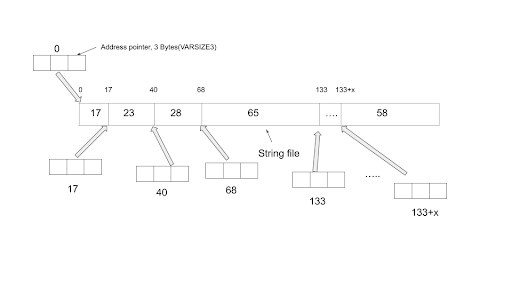

The way AMIHDB stores a variable-length value is to keep an address pointer to point to the

offset position relative to the first string to store each individual new string. As the diagram below

shows, each time we are adding a new string, we are appending it to the end of the preceding

string in the string file. In the example below, each number in the string file block indicates the

number of bytes each string occupies. The first address pointer points to the first string of 17

Bytes long and since there is no preceding string, the offset value the pointer stores is 0. When

we try to add the second string of length 23 Bytes long, we use the second address pointer,

which points to the offset distance relative to the first string, which is 17, to point to the second

string, so on and so forth.

As the diagram below shows, AMIHDB needs 2 files to store the String VARSIZE column. One

file (<column_name>.3fhcol) is the array of offset values for each address pointer and the other

file (<column_name>.3fhdat) stores the actual string payload.

VARSIZE Example

Let’s create a historical table which contains VARSIZE storage types

CREATE PUBLIC table VARSExample(AllNames String, ShortNames String VARSIZE3, MediumNames String VARSIZE4, LongNames String VARSIZE5) USE PersistEngine="HISTORICAL";

Insert into VARSExample values("All the names","abc","abcdef","abcdefghijklmnopqrstuvwxyz");

If we diagnose the table, we can see detailed information for each of the string columns.

Diagnose table VARSExample;

We can see that the AMIHDB by default assigns the string variable to VARSIZE5. So if you are

not declaring a column as " ColumnName String VARSIZE", AMIHDB will assume the

worst case scenario by allocating the maximum memory to the string column (AKA VARSIZE5).

FLAT

FLAT is the storage type for data with a known fixed length. Data types that use FLAT are:

BYTE,SHORT,INT,LONG,FLOAT,DOUBLE,CHAR,UTC,UTCN,UUID,COMPLEX,BOOLEAN

There are three varieties of FLAT:

FLAT, FLAT_NONULL, FLAT_NOMIN (default is FLAT)

- FLAT stores all values and null but uses an extra byte.

- FLAT_NONULL can store all values except null.

- FLAT_NOMIN cat store all values except min value and null

- Note, that if an entry is inserted that does not fit in the column type (for example, inserting null

into a FLAT_NONULL column) AMIHDB will automatically reconfigure and extend the type so the entry will fit. AMIHDB will default to FLAT if not explicitly specified in the CREATE TABLE schema.

Here is an example of storage cost per row for INTEGER, which is 4 bytes:

- INTEGER FLAT: 5 bytes in total, which consists of 4 bytes of data + 1 byte for NULL flag.

- INTEGER FLAT_NONULL: 4 bytes in total (since there is no NULL flag to store)

- INTEGER FLAT_NOMIN: 4 bytes of data in total (the minimum value of that data type is

reserved as a placeholder for null hence its not available)

FLAT Example

First let’s create a historical table with the different FLAT types and insert some rows into it.

If we diagnose the table, we can see that once again, the AMIHDB is assuming the worst case

scenario for the Integer column(FLAT) if you don’t declare the storage type for this column.

CREATE PUBLIC table FlatExample(id int, id2 int FLAT,id3 int FLAT_NOMIN, id4 int FLAT_NONULL) USE PersistEngine="HISTORICAL";

Insert into FlatExample values(1,23,NULL,-2147483648);

Diagnose table FlatExample;