Difference between revisions of "Datasource Adapters"

Tag: visualeditor-switched |

|||

| (244 intermediate revisions by 5 users not shown) | |||

| Line 1: | Line 1: | ||

| + | |||

= Flat File Reader = | = Flat File Reader = | ||

| Line 39: | Line 40: | ||

_fields="String account, Integer qty, Double px" | _fields="String account, Integer qty, Double px" | ||

_pattern="account,qty,px=Account (.*) has (.*) shares at \\$(.*) px" | _pattern="account,qty,px=Account (.*) has (.*) shares at \\$(.*) px" | ||

| − | EXECUTE SELECT * FROM | + | EXECUTE SELECT * FROM file |

</syntaxhighlight> | </syntaxhighlight> | ||

| Line 50: | Line 51: | ||

1. Open the '''datamodeler''' (In Developer Mode -> Menu Bar -> Dashboard -> Datamodel) | 1. Open the '''datamodeler''' (In Developer Mode -> Menu Bar -> Dashboard -> Datamodel) | ||

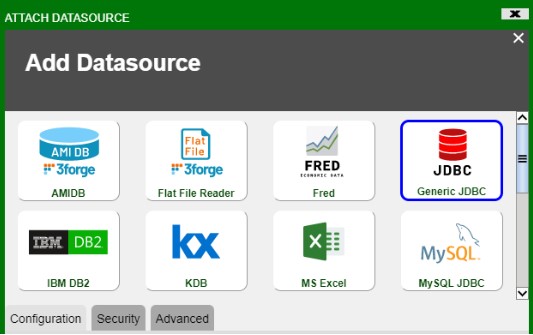

| − | 2. Choose the "''' | + | 2. Choose the "'''Attach Datasource'''" button |

3. Choose '''Flat File Reader''' | 3. Choose '''Flat File Reader''' | ||

| Line 69: | Line 70: | ||

=== File name Directive (Required) === | === File name Directive (Required) === | ||

| + | '''Syntax''' | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_file=''path/to/file''</span> | ||

| − | + | '''Overview''' | |

| − | |||

| − | |||

This directive controls the location of the file to read, relative to the datasource's url. Use the forward slash (/) to indicate directories (standard UNIX convention) | This directive controls the location of the file to read, relative to the datasource's url. Use the forward slash (/) to indicate directories (standard UNIX convention) | ||

| − | + | '''Examples''' | |

| − | _file="data.txt" (Read the ''data.txt'' file, located at the root of the datasource's url) | + | |

| + | <span style="font-family: Courier New; color: blue;">_file="data.txt"</span> (Read the ''data.txt'' file, located at the root of the datasource's url) | ||

| − | _file="subdir/data.txt" (Read the ''data.txt'' file, found under the ''subdir'' directory) | + | <span style="font-family: Courier New; color: blue;">_file="subdir/data.txt"</span> (Read the ''data.txt'' file, found under the ''subdir'' directory) |

=== Field definitions Directive (Required) === | === Field definitions Directive (Required) === | ||

| + | '''Syntax''' | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_fields=''col1_type col1_name, col2_type col2_name, ...''</span> | ||

| − | + | '''Overview''' | |

| − | |||

| − | |||

This directive controls the Column names that will be returned, along with their types. The order in which they are defined is the same as the order in which they are returned. If the column type is not supplied, the default is String. Special note on additional columns: If the line number (see _''linenum'' directive) column is not supplied in the list, it will default to type integer and be added to the end of the table schema. Columns defined in the Pattern (see _''pattern'' directive) but not defined in _''fields'' will be added to the end of the table schema. | This directive controls the Column names that will be returned, along with their types. The order in which they are defined is the same as the order in which they are returned. If the column type is not supplied, the default is String. Special note on additional columns: If the line number (see _''linenum'' directive) column is not supplied in the list, it will default to type integer and be added to the end of the table schema. Columns defined in the Pattern (see _''pattern'' directive) but not defined in _''fields'' will be added to the end of the table schema. | ||

| − | Types should be one of: ''String, Long, Integer, Boolean, Double, Float, UTC'' | + | Types should be one of: <span style="font-family: Courier New; color: blue;">''String, Long, Integer, Boolean, Double, Float, UTC''</span> |

Column names must be valid variable names. | Column names must be valid variable names. | ||

| − | + | '''Examples''' | |

| − | |||

| − | _fields ="fname,lname,int age" (define 3 columns, ''fname'' and ''lname'' default to String) | + | <span style="font-family: Courier New; color: blue;">_fields="String account,Long quantity"</span> (define two columns) |

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_fields ="fname,lname,int age"</span> (define 3 columns, ''fname'' and ''lname'' default to String) | ||
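The defaulting rule described above (a column with no type is treated as String) can be illustrated with a minimal Python sketch. This is not AMI's parser; the helper name <code>parse_fields</code> is hypothetical, and the sketch does not validate type names against the list of supported types.

```python
def parse_fields(spec):
    """Split a _fields value into (type, name) pairs.

    Illustrative only: a column given without a type defaults to String,
    as the directive's overview describes. Type names are passed through
    unchanged rather than validated.
    """
    columns = []
    for item in spec.split(","):
        parts = item.split()
        if len(parts) == 1:
            # Only a name was supplied, so fall back to the default type.
            columns.append(("String", parts[0]))
        elif len(parts) == 2:
            columns.append((parts[0], parts[1]))
        else:
            raise ValueError(f"cannot parse field definition: {item!r}")
    return columns


print(parse_fields("String account,Long quantity"))
print(parse_fields("fname,lname,int age"))
```

In the second call, ''fname'' and ''lname'' come back typed as String while ''age'' keeps the type it was given.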

== Directives for parsing Delimited list of ordered Fields == | == Directives for parsing Delimited list of ordered Fields == | ||

| − | _file=''file_name'' ('''Required''', see general directives) | + | <span style="font-family: Courier New; color: blue;">_file=''file_name''</span> ('''Required''', see general directives) |

| − | _fields=''col1_type col1_name, ...'' ('''Required''', see general directives) | + | <span style="font-family: Courier New; color: blue;">_fields=''col1_type col1_name, ...''</span> ('''Required''', see general directives) |

| − | _delim=delim_string ('''Required''') | + | <span style="font-family: Courier New; color: blue;">_delim=delim_string</span> ('''Required''') |

| − | _conflateDelim=true|false (Optional. Default is false) | + | <span style="font-family: Courier New; color: blue;">_conflateDelim=true|false</span> (Optional. Default is false) |

| − | ''_quote=single_quote_char'' (Optional) | + | <span style="font-family: Courier New; color: blue;">''_quote=single_quote_char''</span> (Optional) |

| − | ''_escape=single_escape_char'' (Optional) | + | <span style="font-family: Courier New; color: blue;">''_escape=single_escape_char''</span> (Optional) |

The _''delim'' indicates the char (or chars) used to separate each field (If _''conflateDelim'' is true, then 1 or more consecutive delimiters are treated as a single delimiter). The _''fields'' is an ordered list of types and field names for each of the delimited fields. If the _''quote'' is supplied, then a field value starting with ''quote'' will be read until another ''quote'' char is found, meaning ''delim''s within ''quotes'' will not be treated as delims. If the _''escape'' char is supplied then when an ''escape'' char is read, it is skipped and the following char is read as a literal. | The _''delim'' indicates the char (or chars) used to separate each field (If _''conflateDelim'' is true, then 1 or more consecutive delimiters are treated as a single delimiter). The _''fields'' is an ordered list of types and field names for each of the delimited fields. If the _''quote'' is supplied, then a field value starting with ''quote'' will be read until another ''quote'' char is found, meaning ''delim''s within ''quotes'' will not be treated as delims. If the _''escape'' char is supplied then when an ''escape'' char is read, it is skipped and the following char is read as a literal. | ||
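As a rough illustration of how the delimiter, quote, escape and conflate rules interact, here is a small Python sketch. It is an approximation written for this page, not the adapter's implementation; the function name <code>split_delimited</code> and its parameters are hypothetical.

```python
def split_delimited(line, delim, quote=None, escape=None, conflate=False):
    """Split one line into field values.

    Illustrative rules, following the description above:
    - an escape char is dropped and the next char is taken literally;
    - a quote at the start of a field opens a quoted run that ends at the
      next quote, so delimiters inside it are ordinary text;
    - with conflate=True, consecutive delimiters count as one.
    """
    fields, current = [], []
    in_quote = False
    i = 0
    while i < len(line):
        ch = line[i]
        if escape and ch == escape and i + 1 < len(line):
            current.append(line[i + 1])
            i += 2
        elif quote and ch == quote and (in_quote or not current):
            in_quote = not in_quote
            i += 1
        elif not in_quote and line.startswith(delim, i):
            fields.append("".join(current))
            current = []
            i += len(delim)
            if conflate:
                # Swallow any further delimiters that follow immediately.
                while line.startswith(delim, i):
                    i += len(delim)
        else:
            current.append(ch)
            i += 1
    fields.append("".join(current))
    return fields


print(split_delimited("11232|1000|123.20", "|"))
print(split_delimited("'1332|ABC'|Smith|30", "|", quote="'"))
print(split_delimited(r"Act\|112|J|18", "|", escape="\\"))
```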

| − | + | '''Examples''' | |

| − | _delim="|" | + | |

| + | <span style="font-family: Courier New; color: blue;">_delim="|"</span> | ||

| − | _fields="code,lname,int age" | + | <span style="font-family: Courier New; color: blue;">_fields="code,lname,int age"</span> |

| − | _quote="'" | + | <span style="font-family: Courier New; color: blue;">_quote="'"</span> |

| − | _escape="\\" | + | <span style="font-family: Courier New; color: blue;">_escape="\\"</span> |

This defines a pattern such that: | This defines a pattern such that: | ||

| Line 150: | Line 156: | ||

== Directives for parsing Key Value Pairs == | == Directives for parsing Key Value Pairs == | ||

| − | _file=''file_name'' ('''Required''', see general directives) | + | <span style="font-family: Courier New; color: blue;">_file=''file_name''</span> ('''Required''', see general directives) |

| − | _fields=''col1_type col1_name, ...'' ('''Required''', see general directives) | + | <span style="font-family: Courier New; color: blue;">_fields=''col1_type col1_name, ...''</span> ('''Required''', see general directives) |

| − | _delim=delim_string ('''Required''') | + | <span style="font-family: Courier New; color: blue;">_delim=delim_string</span> ('''Required''') |

| − | _conflateDelim=true|false (Optional. Default is false) | + | <span style="font-family: Courier New; color: blue;">_conflateDelim=true|false</span> (Optional. Default is false) |

| − | ''_equals=single_equals_char'' ('''Required''') | + | <span style="font-family: Courier New; color: blue;">''_equals=single_equals_char''</span> ('''Required''') |

| − | _mappings=from1=to1,from2=to2,... (Optional) | + | <span style="font-family: Courier New; color: blue;">_mappings=from1=to1,from2=to2,...</span> (Optional) |

| − | ''_quote=single_quote_char'' (Optional) | + | <span style="font-family: Courier New; color: blue;">''_quote=single_quote_char''</span> (Optional) |

| − | ''_escape=single_escape_char'' (Optional) | + | <span style="font-family: Courier New; color: blue;">''_escape=single_escape_char''</span> (Optional) |

The _''delim'' indicates the char (or chars) used to separate each field (If _''conflateDelim'' is true, then 1 or more consecutive delimiters are treated as a single delimiter). The _equals char is used to indicate the key/value separator. The _''fields'' is an ordered list of types and field names for each of the delimited fields. If the _''quote'' is supplied, then a field value starting with ''quote'' will be read until another ''quote'' char is found, meaning ''delim''s within ''quotes'' will not be treated as delims. If the _''escape'' char is supplied then when an ''escape'' char is read, it is skipped and the following char is read as a literal. | The _''delim'' indicates the char (or chars) used to separate each field (If _''conflateDelim'' is true, then 1 or more consecutive delimiters are treated as a single delimiter). The _equals char is used to indicate the key/value separator. The _''fields'' is an ordered list of types and field names for each of the delimited fields. If the _''quote'' is supplied, then a field value starting with ''quote'' will be read until another ''quote'' char is found, meaning ''delim''s within ''quotes'' will not be treated as delims. If the _''escape'' char is supplied then when an ''escape'' char is read, it is skipped and the following char is read as a literal. | ||

| Line 170: | Line 176: | ||

The optional _''mappings'' directive allows you to map keys within the flat file to field names specified in the _''fields'' directive. This is useful when a file has key names that are not valid field names, or when multiple key names should populate the same column. | The optional _''mappings'' directive allows you to map keys within the flat file to field names specified in the _''fields'' directive. This is useful when a file has key names that are not valid field names, or when multiple key names should populate the same column. | ||

| − | === Examples === | + | '''Examples''' |

| − | _delim="|" | + | |

| + | <span style="font-family: Courier New; color: blue;">_delim="|"</span> | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_equals="="</span> | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_fields="code,lname,int age"</span> | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_mappings="20=code,21=lname,22=age"</span> | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_quote="'"</span> | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_escape="\\"</span> | ||

| + | |||

| + | This defines a pattern such that: | ||

| + | |||

| + | ''code=11232-33|lname=Smith|age=20'' | ||

| + | |||

| + | ''code='1332|ABC'|age=30'' | ||

| + | |||

| + | ''20=Act\|112|21=J|22=18 (Note: this row will work due to the _mappings directive)'' | ||

| + | |||

| + | Maps to: | ||

| + | {| class="wikitable" | ||

| + | !code | ||

| + | !lname | ||

| + | !age | ||

| + | |- | ||

| + | |11232-33 | ||

| + | |Smith | ||

| + | |20 | ||

| + | |- | ||

| + | |<nowiki>1332|ABC</nowiki> | ||

| + | | | ||

| + | |30 | ||

| + | |- | ||

| + | |<nowiki>Act|112</nowiki> | ||

| + | |J | ||

| + | |18 | ||

| + | |} | ||
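The mapping from key/value lines to rows can be approximated with the following Python sketch. It is only an illustration of the rules described in this section (delimiter, equals char, quote, escape and key mappings); the function name <code>parse_key_values</code> is hypothetical and AMI's actual parser may differ in edge cases.

```python
def parse_key_values(line, delim, equals, mappings=None, quote=None, escape=None):
    """Parse one key/value line into a dict of column name -> raw string value."""
    mappings = mappings or {}
    row = {}
    key, buf, in_quote = None, [], False
    i = 0
    while i <= len(line):
        at_end = i == len(line)
        ch = None if at_end else line[i]
        if not at_end and escape and ch == escape and i + 1 < len(line):
            # Drop the escape char and keep the next char as a literal.
            buf.append(line[i + 1])
            i += 2
        elif not at_end and quote and ch == quote and key is not None and (in_quote or not buf):
            # A quote at the start of a value opens a quoted run; the next quote closes it.
            in_quote = not in_quote
            i += 1
        elif not at_end and not in_quote and key is None and ch == equals:
            # The text collected so far was the key.
            key, buf = "".join(buf), []
            i += 1
        elif at_end or (not in_quote and line.startswith(delim, i)):
            if key is not None:
                # Translate the key through the mappings before storing.
                row[mappings.get(key, key)] = "".join(buf)
            key, buf = None, []
            i += 1 if at_end else len(delim)
        else:
            buf.append(ch)
            i += 1
    return row


MAPPINGS = {"20": "code", "21": "lname", "22": "age"}
for text in ("code=11232-33|lname=Smith|age=20",
             "code='1332|ABC'|age=30",
             r"20=Act\|112|21=J|22=18"):
    print(parse_key_values(text, "|", "=", MAPPINGS, quote="'", escape="\\"))
```

Values are left as strings here; converting them to the types declared in _''fields'' is omitted to keep the sketch short.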

| + | |||

| + | == Directives for Pattern Capture == | ||

| + | <span style="font-family: Courier New; color: blue;">_file=''file_name''</span> ('''Required''', see general directives) | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_fields=''col1_type col1_name, ...''</span> (Optional, see general directives) | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_''pattern''=''col1_type col1_name, ...=regex_with_grouping''</span> ('''Required''') | ||

| + | |||

| + | The ''_pattern'' must start with a list of column names, followed by an equal sign (=) and then a regular expression with grouping (this is dubbed a column-to-pattern mapping). The regular expression's first grouping value will be mapped to the first column, 2<sup>nd</sup> grouping to the second and so on. | ||

| + | |||

| + | If a column is already defined in the _fields directive, then it's preferred to not include the column type in the ''_pattern'' definition. | ||

| + | |||

| + | For multiple column-to-pattern mappings, use the \n (new line) to separate each one. | ||

| + | |||

| + | '''Examples''' | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_pattern="fname,lname,int age=User (.*) (.*) is (.*) years old"</span> | ||

| + | |||

| + | This defines a pattern such that: | ||

| + | |||

| + | ''User John Smith is 20 years old'' | ||

| + | |||

| + | ''User Bobby Boy is 30 years old'' | ||

| + | |||

| + | Maps to: | ||

| + | {| class="wikitable" | ||

| + | !fname | ||

| + | !lname | ||

| + | !age | ||

| + | |- | ||

| + | |John | ||

| + | |Smith | ||

| + | |20 | ||

| + | |- | ||

| + | |Bobby | ||

| + | |Boy | ||

| + | |30 | ||

| + | |} | ||

| + | <span style="font-family: Courier New; color: blue;">_pattern="fname,lname,int age=User (.*) (.*) is (.*) years old\n lname,fname,int weight=Customer (.*),(.*) weighs (.*) pounds"</span> | ||

| + | |||

| + | This defines two patterns such that: | ||

| + | |||

| + | ''User John Smith is 20 years old'' | ||

| + | |||

| + | ''Customer Boy,Bobby weighs 130 pounds'' | ||

| + | |||

| + | Maps to: | ||

| + | {| class="wikitable" | ||

| + | !fname | ||

| + | !lname | ||

| + | !age | ||

| + | !weight | ||

| + | |- | ||

| + | |John | ||

| + | |Smith | ||

| + | |20 | ||

| + | | | ||

| + | |- | ||

| + | |Bobby | ||

| + | |Boy | ||

| + | | | ||

| + | |130 | ||

| + | |} | ||
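The column-to-pattern idea can be tried out with Python's <code>re</code> module. The sketch below is an illustration only: the helper name <code>capture</code> is hypothetical, it assumes the first matching mapping wins, and it leaves captured values as strings rather than converting them to the declared column types.

```python
import re

# Each entry pairs an ordered list of column names with a regex whose
# groups are assigned to those columns in order.
MAPPINGS = [
    (["fname", "lname", "age"], r"User (.*) (.*) is (.*) years old"),
    (["lname", "fname", "weight"], r"Customer (.*),(.*) weighs (.*) pounds"),
]


def capture(line, mappings):
    """Return a row built from the first mapping whose regex matches, else None."""
    for columns, regex in mappings:
        match = re.search(regex, line)
        if match:
            return dict(zip(columns, match.groups()))
    return None


print(capture("User John Smith is 20 years old", MAPPINGS))
print(capture("Customer Boy,Bobby weighs 130 pounds", MAPPINGS))
```

Columns that a given mapping does not capture (for example ''weight'' on a "User" line) are simply absent from the returned row, which corresponds to the empty cells in the table.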

| + | |||

| + | == Optional Line Number Directives == | ||

| + | |||

| + | === Skipping Lines Directive (optional) === | ||

| + | '''Syntax''' | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_skipLines=''number_of_lines''</span> | ||

| + | |||

| + | '''Overview''' | ||

| + | |||

| + | This directive controls the number of lines to skip from the top of the file. This is useful for ignoring "junk" at the top of a file. If not supplied, then no lines are skipped. From a performance standpoint, skipping lines is highly efficient. | ||

| + | |||

| + | '''Examples''' | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_skipLines="0"</span> (this is the default, don't skip any lines) | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_skipLines="1"</span> (skip the first line, for example if there is a header) | ||

| + | |||

| + | === Line Number Column Directive (optional) === | ||

| + | '''Syntax''' | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_linenum=''column_name''</span> | ||

| + | |||

| + | '''Overview''' | ||

| + | |||

| + | This directive controls the name of the column that will contain the line number. If not supplied, the default is "linenum". Notes about the line number: the first line of the file is line number 1, and skipped or filtered-out lines still count toward the numbering. For example, if _''skipLines''=2, then the first returned line will have a line number of 3. | ||

| + | |||

| + | '''Examples''' | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_linenum=""</span> (A line number column is not included in the table) | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_linenum="linenum"</span> (The column ''linenum'' will contain line numbers, this is the default) | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_linenum="rownum"</span> (The column ''rownum'' will contain line numbers) | ||
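The interaction between skipped lines and line numbering can be sketched in Python as follows. The function name <code>number_lines</code> is hypothetical and the row layout (a ''line'' column plus the line number column) is only an assumption made for illustration.

```python
def number_lines(lines, skip_lines=0, linenum="linenum"):
    """Attach 1-based line numbers, counting skipped lines as well.

    An empty linenum name means no line number column is produced.
    """
    rows = []
    for number, text in enumerate(lines, start=1):
        if number <= skip_lines:
            continue  # skipped, but the counter has still advanced
        row = {"line": text}
        if linenum:
            row[linenum] = number
        rows.append(row)
    return rows


print(number_lines(["junk", "header", "a", "b"], skip_lines=2))
print(number_lines(["a", "b"], linenum="rownum"))
print(number_lines(["a", "b"], linenum=""))
```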

| + | |||

| + | == Optional Line Filtering Directives == | ||

| + | |||

| + | === Filtering Out Lines Directive (optional) === | ||

| + | '''Syntax''' | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_filterOut=''regex''</span> | ||

| + | |||

| + | '''Overview''' | ||

| + | |||

| + | Any line that matches the supplied regular expression will be ignored. If not supplied, then no lines are filtered out. From a performance standpoint, this is applied before other parsing is considered, so ignoring lines with a filter-out directive is faster than using a WHERE clause, for example. | ||

| + | |||

| + | '''Examples''' | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_filterOut="Test"</span> (ignore any lines containing the text ''Test'') | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_filterOut="^Comment"</span> (ignore any lines starting with ''Comment'') | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_filterOut="This|That"</span> (ignore any lines containing the text ''This'' or ''That'') | ||

| + | |||

| + | === Filtering In Lines Directive (optional) === | ||

| + | '''Syntax''' | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_filterIn=''regex''</span> | ||

| + | |||

| + | '''Overview''' | ||

| + | |||

| + | Only lines that match the supplied regular expression will be considered. If not supplied, then all lines are considered. From a performance standpoint, this is applied before other parsing is considered, so narrowing down the lines with a filter-in directive is faster than using a WHERE clause, for example. If you use a grouping (..) inside the regular expression, then only the contents of the first grouping will be considered for parsing. | ||

| + | |||

| + | '''Examples''' | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_filterIn="3Forge"</span> (ignore any lines that don't contain the word ''3Forge'') | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_filterIn="^Outgoing"</span> (ignore any lines that don't start with ''Outgoing'') | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_filterIn="Data(.*)"</span> (ignore any lines that don't contain ''Data'', and only consider the text after the word ''Data'' for processing) | ||
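A rough Python sketch of the two filters is shown below. It assumes "contains" semantics for the regular expressions and applies the filter-out check before the filter-in check; both choices are assumptions made for illustration, and the function name <code>apply_filters</code> is hypothetical.

```python
import re


def apply_filters(lines, filter_in=None, filter_out=None):
    """Drop lines matching filter_out, keep only lines matching filter_in.

    If filter_in contains a grouping, only the first group's text is
    passed on for parsing.
    """
    kept = []
    for text in lines:
        if filter_out and re.search(filter_out, text):
            continue
        if filter_in:
            match = re.search(filter_in, text)
            if not match:
                continue
            if match.re.groups:
                # Narrow the line down to the first captured group.
                text = match.group(1) or ""
        kept.append(text)
    return kept


sample = ["Data: 1", "Other", "Data: 2 Test", "^Comment line"]
print(apply_filters(sample, filter_in="Data(.*)", filter_out="Test"))
print(apply_filters(sample, filter_out="This|That|Other"))
```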

| + | |||

| + | |||

| + | === Python Adapter Guide === | ||

| + | 1. Introduction | ||

| + | The Python adapter is a library that gives Python scripts access to both the console port and the real-time port via sockets.<br> | ||

| + | The adapter is meant to be integrated with external Python libraries and does not contain a __main__ entry point. To try the simple Python demo, switch to the example branch and run demo.py.<br> | ||

| + | The adapter's default arguments should work with AMI out of the box, but they can be customized through the input arguments. To view the full set of arguments, run the program with the --help argument.<br> | ||

| + | |||

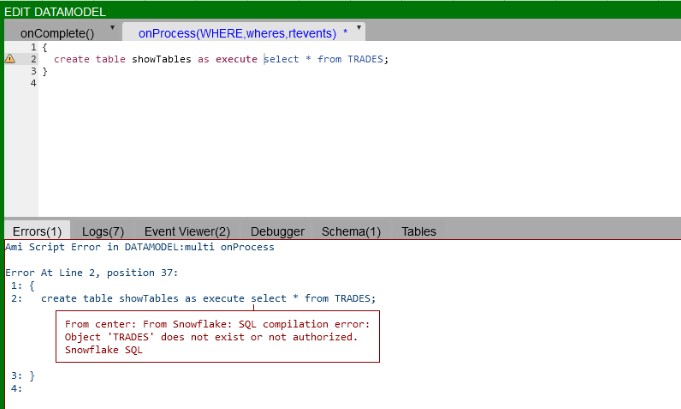

| + | = MongoDB adapter = | ||

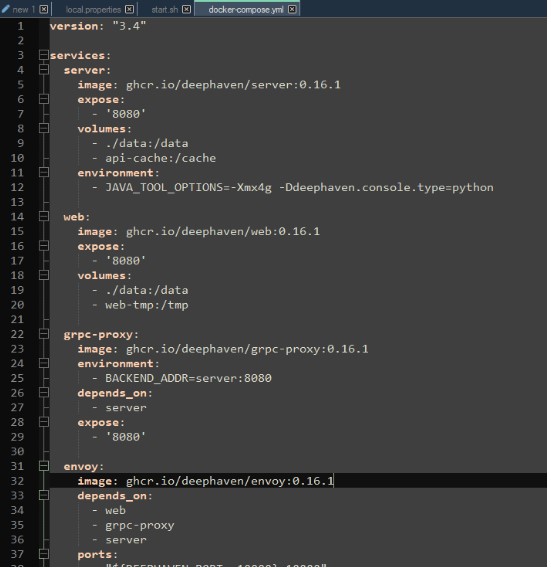

| + | 1. Setup <br> | ||

| + | (a). Extract the contents of ami_adapter_mongo.9593.dev.obv.tar.gz into the lib directory of your currently installed AMI (located at '''./amione/lib/'''). Make sure the archive is unpacked into its individual files ending with .jar.<br> | ||

| + | [[File:Mongo Adapter1.png]]<br> | ||

| + | |||

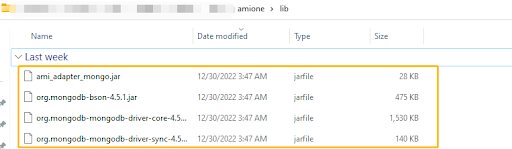

| + | (b). Go into your config directory (located at '''ami\amione\config''') and edit or make a '''local.properties'''<br> | ||

| + | Search for '''ami.datasource.plugins''', add the Mongo Plugin to the list of datasource plugins:<br> | ||

| + | <pre>ami.datasource.plugins=$${ami.datasource.plugins},com.f1.ami.plugins.mongo.AmiMongoDatasourcePlugin</pre> | ||

| + | Here is an example of what it might look like:<br> | ||

| + | [[File:Mongo Adapter2.png]] <br> | ||

| + | Note: '''$${ami.datasource.plugins}''' references the existing plugin list. Do not put a space before or after the comma.<br> | ||

| + | |||

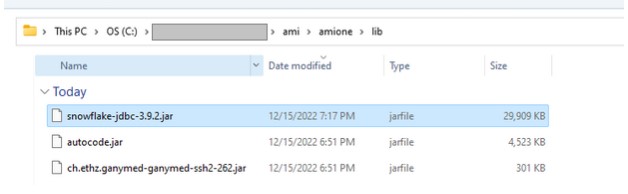

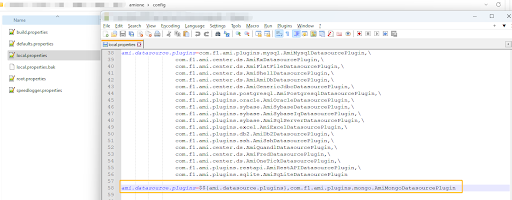

| + | (c). Restart AMI<br> | ||

| + | (d). Go to '''Dashboard->Data modeller''' and select '''Attach Datasource'''. <br> | ||

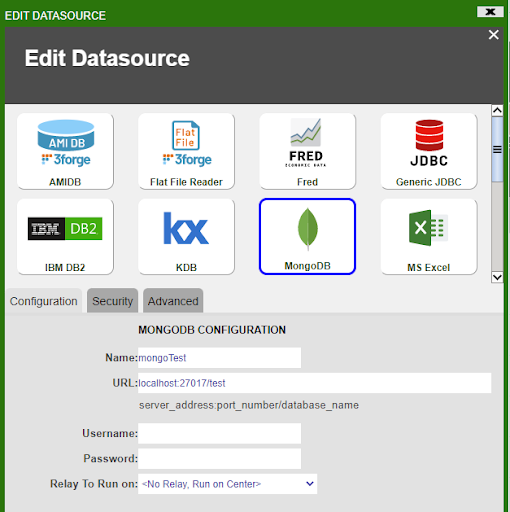

| + | [[File:Mongo Adapter3.png]]<br> | ||

| + | (e). Select MongoDB as the Datasource. Give your Datasource a name and configure the URL. | ||

| + | The URL should take the following format:<br> | ||

| + | <pre>URL: server_address:port_number/Your_Database_Name </pre> | ||

| + | |||

| + | In the demonstration below, the URL is: '''localhost:27017/test'''. MongoDB listens on port 27017 by default, and we are connecting to the ''test'' database.<br> | ||

| + | [[File:Mongo Adapter4.png]]<br> | ||

| + | |||

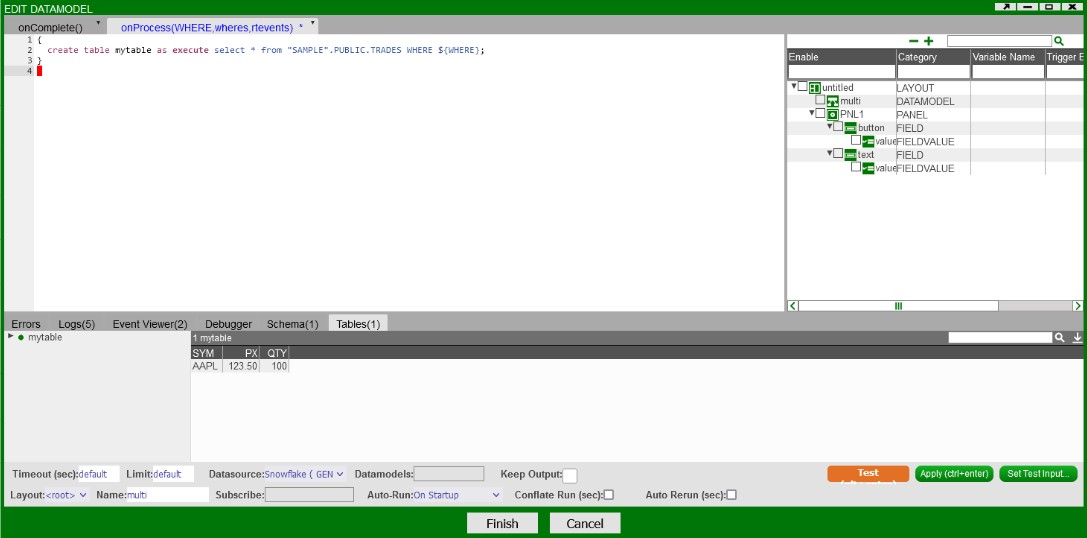

| + | 2. Send queries to MongoDB in AMI <br> | ||

| + | The AMI MongoDB adapter allows you to query a MongoDB datasource and output the results as SQL tables. | ||

| + | This section will demonstrate how to query MongoDB in AMI. The general syntax for querying MongoDB is:<br> | ||

| + | |||

| + | <syntaxhighlight lang="sql" > CREATE TABLE Your_Table_Name AS USE EXECUTE <Your_MongoDB_query> </syntaxhighlight> <br> | ||

| + | Note that whatever comes after the keyword '''EXECUTE''' is the MongoDB query, which should follow the MongoDB query syntax. | ||

| + | |||

| + | (a). Create an AMI SQL table from a MongoDB collection <br> | ||

| + | <span class="nowrap"> </span> (i). MongoDB collection example1<br> | ||

| + | In the MongoDB shell, let’s create a collection called “zipcodes”, and insert a row into it.<br> | ||

| + | <syntaxhighlight lang="sql" >db.createCollection("zipcodes"); | ||

| + | db.zipcodes.insert({id:'01001',city:'AGAWAM', loc:[-72.622,42.070],pop:15338, state:'MA'}); | ||

| + | </syntaxhighlight> | ||

| + | |||

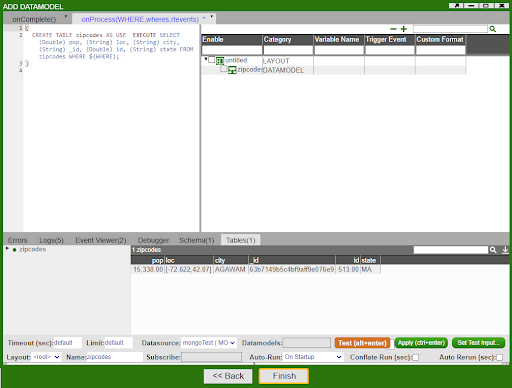

| + | <span class="nowrap"> </span> (ii). Query this collection in AMI<br> | ||

| + | <syntaxhighlight lang="sql" >CREATE TABLE zips AS USE EXECUTE SELECT (String)_id,(String)city,(String)loc,(Integer)pop,(String)state FROM zipcodes WHERE ${WHERE};</syntaxhighlight> | ||

| + | |||

| + | [[File:Mongo Adapter5.png]]<br> | ||

| + | |||

| + | (b). Create an AMI SQL table from a MongoDB collection with nested columns <br> | ||

| + | <span class="nowrap"> </span> (i). Inside the MongoDB shell, we can create a collection named "myAccounts" and insert one row into the collection.<br> | ||

| + | <syntaxhighlight lang="sql"> | ||

| + | db.createCollection("myAccounts"); | ||

| + | db.myAccounts.insert({id:1,name:'John Doe', address:{city:'New York City', state:'New York'}});</syntaxhighlight> | ||

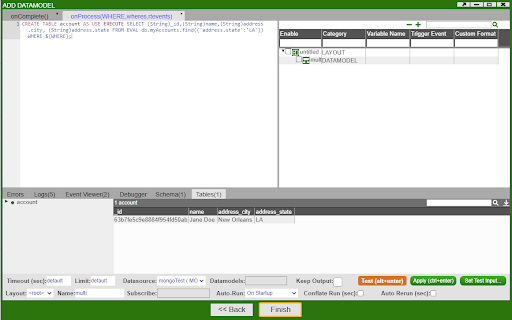

| + | <span class="nowrap"> </span> (ii). Query this collection in AMI <br> | ||

| + | <syntaxhighlight lang="sql"> CREATE TABLE account AS USE EXECUTE SELECT (String)_id,(String)name,(String)address.city, (String)address.state FROM myAccounts WHERE ${WHERE};</syntaxhighlight> | ||

| + | |||

| + | (c). Create an AMI SQL table from a MongoDB collection using EVAL methods <br> | ||

| + | <span class="nowrap"> </span> (i). '''Find''' <br> | ||

| + | |||

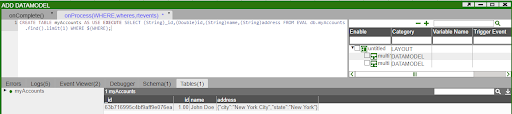

| + | Let’s use the myAccounts MongoDB collection that we created before and insert some rows into it. Inside MongoDB shell:<br> | ||

| + | <syntaxhighlight lang="sql"> | ||

| + | db.myAccounts.insert({id:1,name:'John Doe', address:{city:'New York City', state:'NY'}}); | ||

| + | db.myAccounts.insert({id:2,name:'Jane Doe', address:{city:'New Orleans', state:'LA'}}); | ||

| + | </syntaxhighlight> | ||

| + | |||

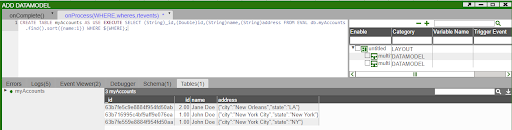

| + | If we want to create a SQL table from MongoDB that finds all rows whose address state is '''LA''', we can enter the following command in the AMI script and hit '''test''':<br> | ||

| + | <syntaxhighlight lang="sql"> | ||

| + | CREATE TABLE account AS USE EXECUTE SELECT (String)_id,(String)name,(String)address.city, (String)address.state FROM EVAL db.myAccounts.find({'address.state':'LA'}) WHERE ${WHERE}; | ||

| + | </syntaxhighlight> | ||

| + | [[File:Mongo Adapter find.png]] <br> | ||

| + | |||

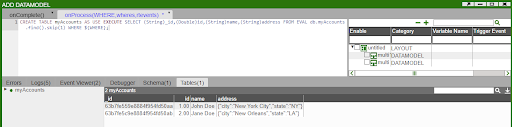

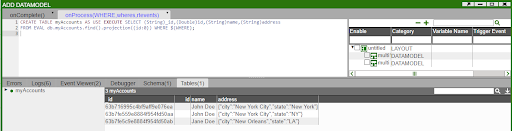

| + | <span class="nowrap"> </span> (ii). '''Limit''' <br> | ||

| + | <syntaxhighlight lang="sql"> | ||

| + | CREATE TABLE myAccounts AS USE EXECUTE SELECT (String)_id,(Double)id,(String)name,(String)address FROM EVAL db.myAccounts.find().limit(1) WHERE ${WHERE}; | ||

| + | </syntaxhighlight> | ||

| + | |||

| + | [[File:Mongo Adapter limit.png]] | ||

| + | |||

| + | <span class="nowrap"> </span> (iii). '''Skip''' <br> | ||

| + | <syntaxhighlight lang="sql"> | ||

| + | CREATE TABLE myAccounts AS USE EXECUTE SELECT (String)_id,(Double)id,(String)name,(String)address FROM EVAL db.myAccounts.find().skip(1) WHERE ${WHERE}; | ||

| + | </syntaxhighlight> | ||

| + | |||

| + | [[File:Mongo Adapter skip.png]] | ||

| + | |||

| + | <span class="nowrap"> </span> (iv). '''Sort''' <br> | ||

| + | <syntaxhighlight lang="sql"> | ||

| + | CREATE TABLE myAccounts AS USE EXECUTE SELECT (String)_id,(Double)id,(String)name,(String)address FROM EVAL db.myAccounts.find().sort({name:1}) WHERE ${WHERE}; | ||

| + | </syntaxhighlight> | ||

| + | |||

| + | [[File:Mongo Adapter sort.png]] | ||

| + | |||

| + | <span class="nowrap"> </span> (v). '''Projection''' <br> | ||

| + | <syntaxhighlight lang="sql"> | ||

| + | CREATE TABLE myAccounts AS USE EXECUTE SELECT (String)_id,(Double)id,(String)name,(String)address FROM EVAL db.myAccounts.find().projection({id:0}) WHERE ${WHERE}; | ||

| + | </syntaxhighlight> | ||

| + | |||

| + | [[File:Mongo Adapter projection.png]] | ||

| + | |||

| + | = Shell Command Reader = | ||

| + | |||

| + | == Overview == | ||

| + | The AMI Shell Command Datasource Adapter is a highly configurable adapter designed to execute shell commands and capture the stdout, stderr and exit code. A number of directives control how the command is executed, including setting environment variables and supplying data to be passed to stdin. The adapter processes the output from the command. Each line (delimited by a line feed) is considered independently for parsing. Note that the EXECUTE <sql> clause supports the full AMI SQL language. | ||

| + | |||

| + | Note that running the command produces three tables: | ||

| + | |||

| + | * Stdout - Contains the contents of standard out | ||

| + | * Stderr - Contains the contents of standard error | ||

| + | * exitCode - Contains the exit code of the process | ||

| + | |||

| + | (You can limit which tables are returned using the ''_capture'' directive) | ||

| + | |||

| + | Generally speaking, the parser can handle '''four''' ('''4''') different methods of parsing: | ||

| + | |||

| + | === Delimited list of ordered fields === | ||

| + | Example data and query: | ||

| + | |||

| + | ''11232''|''1000''|''123.20'' | ||

| + | |||

| + | ''12412''|''8900''|''430.90''<syntaxhighlight lang="sql"> | ||

| + | CREATE TABLE mytable AS USE _cmd="my_cmd" _delim="|" | ||

| + | _fields="String account, Integer qty, Double px" | ||

| + | EXECUTE SELECT * FROM cmd | ||

| + | |||

| + | |||

| + | </syntaxhighlight> | ||

| + | |||

| + | === Key value pairs === | ||

| + | Example data and query: | ||

| + | |||

| + | account=''11232''|quantity=''1000''|price=''123.20'' | ||

| + | |||

| + | account=''12412''|quantity=''8900''|price=''430.90''<syntaxhighlight lang="sql"> | ||

| + | CREATE TABLE mytable AS USE _cmd="my_cmd" _delim="|" _equals="=" | ||

| + | _fields="String account, Integer qty, Double px" | ||

| + | EXECUTE SELECT * FROM cmd | ||

| + | </syntaxhighlight> | ||

| + | |||

| + | === Pattern Capture === | ||

| + | Example data and query: | ||

| + | |||

| + | Account ''11232'' has ''1000'' shares at $''123.20'' px | ||

| + | |||

| + | Account ''12412'' has ''8900'' shares at $''430.90'' px<syntaxhighlight lang="sql"> | ||

| + | CREATE TABLE mytable AS USE _cmd="my_cmd" | ||

| + | _fields="String account, Integer qty, Double px" | ||

| + | _pattern="account,qty,px=Account (.*) has (.*) shares at \\$(.*) px" | ||

| + | EXECUTE SELECT * FROM cmd | ||

| + | </syntaxhighlight> | ||

| + | |||

| + | === Raw Line === | ||

| + | If you do not specify a _''fields'', _''mappings'' or _''pattern'' directive, then the parser defaults to a simple row-per-line parser. A "line" column is generated containing the entire contents of each line from the command's output.<syntaxhighlight lang="sql"> | ||

| + | CREATE TABLE mytable AS USE _cmd="my_cmd" EXECUTE SELECT * FROM cmd | ||

| + | </syntaxhighlight> | ||

| + | |||

| + | == Configuring the Adapter for first use == | ||

| + | 1. Open the '''datamodeler''' (In Developer Mode -> Menu Bar -> Dashboard -> Datamodel) | ||

| + | |||

| + | 2. Choose the "'''Add Datasource'''" button | ||

| + | |||

| + | 3. Choose '''Shell Command Reader''' | ||

| + | |||

| + | 4. In the Add datasource dialog: | ||

| + | |||

| + | '''Name''': Supply a user defined Name, ex: ''MyShell'' | ||

| + | |||

| + | '''URL''': /full/path/to/path/of/working/directory (ex: ''/home/myuser/files'' ) | ||

| + | |||

| + | (Keep in mind that the path is on the machine running AMI, not necessarily your local desktop) | ||

| + | |||

| + | 5. Click "Add Datasource" Button | ||

| + | |||

| + | '''Running Commands Remotely''': You can also execute commands on remote machines using an ''AMI Relay''. First install an AMI relay on the machine where the command should be executed (see the ''AMI for the Enterprise'' documentation for details on how to install an AMI relay). Then, in the Add Datasource wizard, select the relay in the "Relay To Run On" dropdown. | ||

| + | |||

| + | == General Directives == | ||

| + | |||

| + | === Command Directive (Required) === | ||

| + | '''Syntax''' | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_cmd="''command to run''"</span> | ||

| + | |||

| + | '''Overview''' | ||

| + | |||

| + | This directive controls the command to execute. | ||

| + | |||

| + | '''Examples''' | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_cmd="ls -lrt"</span> (execute ls -lrt) | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_cmd="ls | sort"</span> (execute ls and pipe that into sort) | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_cmd="dir /s"</span> (execute dir on a windows system) | ||

| + | |||

| + | === Supplying Standard Input (Optional) === | ||

| + | '''Syntax''' | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_stdin="''text to pipe into stdin''"</span> | ||

| + | |||

| + | '''Overview''' | ||

| + | |||

| + | This directive controls what data is piped into the standard input (stdin) of the process to run. | ||

| + | |||

| + | '''Example''' | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_cmd="cat > out.txt" _stdin="hello world"</span> (will pipe "hello world" into out.txt) | ||
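For readers less familiar with piping text into a process, the same idea can be sketched outside AMI with Python's standard <code>subprocess</code> module. This is an analogy only, not how the adapter is implemented; the helper name <code>run_with_stdin</code> and the child command are hypothetical.

```python
import subprocess
import sys


def run_with_stdin(cmd, stdin_text):
    """Run cmd, feed stdin_text to its standard input, and return its stdout."""
    completed = subprocess.run(cmd, input=stdin_text, capture_output=True, text=True)
    return completed.stdout


# A portable stand-in for a shell command: a child Python process that
# upper-cases whatever arrives on its stdin.
CHILD = [sys.executable, "-c", "import sys; sys.stdout.write(sys.stdin.read().upper())"]

print(run_with_stdin(CHILD, "hello world"))
```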

| + | |||

| + | === Controlling what is captured from the Process (Optional) === | ||

| + | '''Syntax''' | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_capture="comma_delimited_list"</span> (''default is stdout,stderr,exitCode'') | ||

| + | |||

| + | '''Overview''' | ||

| + | |||

| + | This directive is used to control what output data from running the command is captured. It is a comma delimited list and the order determines what order the tables are returned in. An empty list ("") indicates that nothing will be captured (the command is executed, and all output is ignored). Options include the following: | ||

| + | |||

| + | * <span style="font-family: Courier New; color: blue;">stdout</span> - Capture standard out from the process | ||

| + | * <span style="font-family: Courier New; color: blue;">stderr</span> - Capture standard error from the process | ||

| + | * <span style="font-family: Courier New; color: blue;">exitCode</span> - Capture the exit code from the process | ||

| + | '''Examples''' | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_capture="exitCode,stdout"</span> (the 1<sup>st</sup> table will contain the exit code, 2<sup>nd</sup> will contain stdout) | ||
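
The capture ordering described above can be approximated outside AMI with a short Python sketch. This is only an illustration of the described behaviour, not AMI's implementation; the function name <code>run_and_capture</code> and its parameters are hypothetical, and running the command through the system shell is an assumption.

```python
import subprocess


def run_and_capture(cmd, capture=("stdout", "stderr", "exitCode"), stdin=None):
    """Run cmd in a shell and return (name, rows) pairs in the requested order."""
    proc = subprocess.run(
        cmd,
        shell=True,            # assumption: the command string is handed to a shell
        input=stdin,           # text piped to the process's stdin, if any
        capture_output=True,
        text=True,
    )
    available = {
        "stdout": proc.stdout.splitlines(),
        "stderr": proc.stderr.splitlines(),
        "exitCode": [proc.returncode],
    }
    # The order of `capture` decides the order of the returned tables.
    return [(name, available[name]) for name in capture]
```

Calling <code>run_and_capture("echo hello", capture=("exitCode", "stdout"))</code> returns the exit code first and the stdout lines second, mirroring the ''_capture="exitCode,stdout"'' example; an empty tuple returns nothing while still running the command.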

| + | |||

| + | === Field Definitions Directive (Required) === | ||

| + | '''Syntax''' | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_fields=''col1_type col1_name, col2_type col2_name, ...''</span> | ||

| + | |||

| + | '''Overview''' | ||

| + | |||

| + | This directive controls the Column names that will be returned, along with their types. The order in which they are defined is the same as the order in which they are returned. If the column type is not supplied, the default is String. Special note on additional columns: If the line number (see _''linenum'' directive) column is not supplied in the list, it will default to type integer and be added to the end of the table schema. Columns defined in the Pattern (see _''pattern'' directive) but not defined in _''fields'' will be added to the end of the table schema. | ||

| + | |||

| + | Types should be one of: <span style="font-family: Courier New; color: blue;">''String, Long, Integer, Boolean, Double, Float, UTC''</span> | ||

| + | |||

| + | Column names must be valid variable names. | ||

| + | |||

| + | '''Examples''' | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_fields="String account,Long quantity"</span> (define two columns) | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_fields="fname,lname,int age"</span> (define 3 columns, ''fname'' and ''lname'' default to String) | ||

| + | |||

| + | === Environment Directive (Optional) === | ||

| + | '''Syntax''' | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_env="''key=value,key=value,...''"</span> (Optional) | ||

| + | |||

| + | '''Overview''' | ||

| + | |||

| + | This directive controls what environment variables are set when running a command | ||

| + | |||

| + | '''Example''' | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_env="name=Rob,Location=NY"</span> | ||

| + | |||

| + | === Use Host Environment Directive (Optional) === | ||

| + | '''Syntax''' | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_useHostEnv=true|false</span> (Optional. Default is false) | ||

| + | |||

| + | '''Overview''' | ||

| + | |||

| + | If true, the environment properties of the AMI process executing the command are passed to the shell. Note that _''env'' values can be used to override specific environment variables. | ||

| + | |||

| + | '''Example''' | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_useHostEnv="true"</span> | ||
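
How ''_env'' and ''_useHostEnv'' combine can be sketched in Python as follows. This is a hedged illustration of the behaviour described above, not AMI code; <code>build_environment</code> and its parameters are hypothetical names, and the simple comma/equals splitting assumes values contain no commas.

```python
import os


def build_environment(env_directive="", use_host_env=False, host_env=None):
    """Return the environment a command would run with under the two directives."""
    host_env = os.environ if host_env is None else host_env
    # Start from the host environment only when _useHostEnv is true.
    env = dict(host_env) if use_host_env else {}
    # _env entries are applied last, so they override inherited variables.
    for pair in filter(None, env_directive.split(",")):
        key, _, value = pair.partition("=")
        env[key.strip()] = value
    return env
```

With <code>use_host_env=True</code>, an entry such as <code>PATH=/x</code> replaces the inherited <code>PATH</code> while every other host variable is kept.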

| + | |||

| + | == Directives for parsing Delimited list of ordered Fields == | ||

| + | <span style="font-family: Courier New; color: blue;">_cmd=''command_to_execute''</span> ('''Required''', see general directives) | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_fields=''col1_type col1_name, ...''</span> ('''Required''', see general directives) | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_delim=delim_string</span> ('''Required''') | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_conflateDelim=true|false</span> (Optional. Default is false) | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">''_quote=single_quote_char''</span> (Optional) | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">''_escape=single_escape_char''</span> (Optional) | ||

| + | |||

| + | The _''delim'' directive indicates the char (or chars) used to separate each field (if _''conflateDelim'' is true, then 1 or more consecutive delimiters are treated as a single delimiter). The _''fields'' directive is an ordered list of types and field names for each of the delimited fields. If _''quote'' is supplied, then a field value starting with the ''quote'' char will be read until another ''quote'' char is found, meaning delimiters within quotes will not be treated as delimiters. If the _''escape'' char is supplied, then when an ''escape'' char is read, it is skipped and the following char is read as a literal. | ||

| + | |||

| + | '''Examples''' | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_delim="|"</span> | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_fields="code,lname,int age"</span> | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_quote="'"</span> | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_escape="\\"</span> | ||

| + | |||

| + | This defines a pattern such that: | ||

| + | |||

| + | ''11232-33|Smith|20'' | ||

| + | |||

| + | ''<nowiki/>'1332|ABC'||30'' | ||

| + | |||

| + | ''Account\|112|Jones|18'' | ||

| + | |||

| + | Maps to: | ||

| + | {| class="wikitable" | ||

| + | !code | ||

| + | !lname | ||

| + | !age | ||

| + | |- | ||

| + | |11232-33 | ||

| + | |Smith | ||

| + | |20 | ||

| + | |- | ||

| + | |<nowiki>1332|ABC</nowiki> | ||

| + | | | ||

| + | |30 | ||

| + | |- | ||

| + | |<nowiki>Account|112</nowiki> | ||

| + | |Jones | ||

| + | |18 | ||

| + | |} | ||
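
The quote and escape rules above can be reproduced with a small Python sketch. It is an approximation written from the description in this section, not the adapter's actual parser; <code>split_delimited</code> and its parameter names are hypothetical.

```python
def split_delimited(line, delim="|", quote=None, escape=None, conflate=False):
    """Split one line into fields, honouring optional quote and escape chars."""
    fields, buf = [], []
    i, in_quote = 0, False
    while i < len(line):
        ch = line[i]
        if escape is not None and ch == escape and i + 1 < len(line):
            buf.append(line[i + 1])      # skip the escape, keep the next char literally
            i += 2
        elif quote is not None and ch == quote and (in_quote or not buf):
            in_quote = not in_quote      # opening quote at field start, or closing quote
            i += 1
        elif not in_quote and line.startswith(delim, i):
            fields.append("".join(buf))  # an unquoted delimiter ends the field
            buf = []
            i += len(delim)
            if conflate:                 # treat a run of delimiters as one
                while line.startswith(delim, i):
                    i += len(delim)
        else:
            buf.append(ch)
            i += 1
    fields.append("".join(buf))
    return fields
```

Applied to the three sample lines with <code>quote="'"</code> and a backslash as the escape char, the sketch yields the same three rows as the table above. Values are returned as strings; converting them to the types named in _''fields'' is left out.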

| + | |||

| + | == Directives for parsing Key Value Pairs == | ||

| + | <span style="font-family: Courier New; color: blue;">_cmd=''command_to_execute''</span> ('''Required''', see general directives) | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_fields=''col1_type col1_name, ...''</span> ('''Required''', see general directives) | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_delim=delim_string</span> ('''Required''') | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_conflateDelim=true|false</span> (Optional. Default is false) | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">''_equals=single_equals_char''</span> ('''Required''') | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_mappings=from1=to1,from2=to2,...</span> (Optional) | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">''_quote=single_quote_char''</span> (Optional) | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">''_escape=single_escape_char''</span> (Optional) | ||

| + | |||

| + | The _''delim'' indicates the char (or chars) used to separate each field (If _''conflateDelim'' is true, then 1 or more consecutive delimiters are treated as a single delimiter). The _equals char is used to indicate the key/value separator. The _''fields'' is an ordered list of types and field names for each of the delimited fields. If the _''quote'' is supplied, then a field value starting with ''quote'' will be read until another ''quote'' char is found, meaning ''delim''s within ''quotes'' will not be treated as delims. If the _''escape'' char is supplied then when an ''escape'' char is read, it is skipped and the following char is read as a literal. | ||

| + | |||

| + | The optional _''mappings'' directive allows you to map keys within the output to field names specified in the _''fields'' directive. This is useful when the output has key names that are not valid field names, or when the output has multiple key names that should populate the same column. | ||

| + | |||

| + | '''Examples''' | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_delim="|"</span> | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_equals="="</span> | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_fields="code,lname,int age"</span> | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_mappings="20=code,21=lname,22=age"</span> | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_quote="'"</span> | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_escape="\\"</span> | ||

| + | |||

| + | This defines a pattern such that: | ||

| + | |||

| + | ''code=11232-33|lname=Smith|age=20'' | ||

| + | |||

| + | ''code='1332|ABC'|age=30'' | ||

| + | |||

| + | ''20=Act\|112|21=J|22=18 (Note: this row will work due to the _mappings directive)'' | ||

| + | |||

| + | Maps to: | ||

| + | {| class="wikitable" | ||

| + | !code | ||

| + | !lname | ||

| + | !age | ||

| + | |- | ||

| + | |11232-33 | ||

| + | |Smith | ||

| + | |20 | ||

| + | |- | ||

| + | |<nowiki>1332|ABC</nowiki> | ||

| + | | | ||

| + | |30 | ||

| + | |- | ||

| + | |<nowiki>Act|112</nowiki> | ||

| + | |J | ||

| + | |18 | ||

| + | |} | ||
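
The role of _''mappings'' can be illustrated with a short Python sketch. It is a simplified model based on the description above and not AMI's implementation: quoting and escaping are omitted, values stay as strings, and ignoring keys that match no column is an assumption. The name <code>parse_key_values</code> is hypothetical.

```python
def parse_key_values(line, fields, delim="|", equals="=", mappings=None):
    """Return one row as a dict holding a value (or None) for each name in fields."""
    mappings = mappings or {}
    row = {name: None for name in fields}
    for pair in line.split(delim):
        key, sep, value = pair.partition(equals)
        if not sep:
            continue                     # token without a key/value separator
        column = mappings.get(key, key)  # translate the key when a mapping exists
        if column in row:                # assumption: unknown keys are ignored
            row[column] = value
    return row
```

With <code>mappings={"20": "code", "21": "lname", "22": "age"}</code>, a line keyed by 20, 21 and 22 fills the same columns as a line keyed by ''code'', ''lname'' and ''age''.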

| + | |||

| + | == Directives for Pattern Capture == | ||

| + | <span style="font-family: Courier New; color: blue;">_cmd=''command_to_execute''</span> ('''Required''', see general directives) | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_fields=''col1_type col1_name, ...''</span> (Optional, see general directives) | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_''pattern''=''col1_type col1_name, ...=regex_with_grouping''</span> ('''Required''') | ||

| + | |||

| + | The ''_pattern'' must start with a list of column names, followed by an equal sign (=) and then a regular expression with grouping (this is dubbed a column-to-pattern mapping). The regular expression's first grouping value will be mapped to the first column, 2<sup>nd</sup> grouping to the second and so on. | ||

| + | |||

| + | If a column is already defined in the _fields directive, then it's preferred to not include the column type in the ''_pattern'' definition. | ||

| + | |||

| + | For multiple column-to-pattern mappings, use the \n (new line) to separate each one. | ||

| + | |||

| + | '''Examples''' | ||

| + | |||

| + | <span style="font-family: Courier New;color: blue;">_pattern="fname,lname,int age=User (.*) (.*) is (.*) years old"</span> | ||

| + | |||

| + | This defines a pattern such that: | ||

| + | |||

| + | ''User John Smith is 20 years old'' | ||

| + | |||

| + | ''User Bobby Boy is 30 years old'' | ||

| + | |||

| + | Maps to: | ||

| + | {| class="wikitable" | ||

| + | !fname | ||

| + | !lname | ||

| + | !age | ||

| + | |- | ||

| + | |John | ||

| + | |Smith | ||

| + | |20 | ||

| + | |- | ||

| + | |Bobby | ||

| + | |Boy | ||

| + | |30 | ||

| + | |} | ||

| + | <span style="font-family: Courier New; color: blue;">_pattern="fname,lname,int age=User (.*) (.*) is (.*) years old\n lname,fname,int weight=Customer (.*),(.*) weighs (.*) pounds"</span> | ||

| + | |||

| + | This defines two patterns such that: | ||

| + | |||

| + | ''User John Smith is 20 years old'' | ||

| + | |||

| + | ''Customer Boy,Bobby weighs 130 pounds'' | ||

| + | |||

| + | Maps to: | ||

| + | {| class="wikitable" | ||

| + | !fname | ||

| + | !lname | ||

| + | !age | ||

| + | !weight | ||

| + | |- | ||

| + | |John | ||

| + | |Smith | ||

| + | |20 | ||

| + | | | ||

| + | |- | ||

| + | |Bobby | ||

| + | |Boy | ||

| + | | | ||

| + | |130 | ||

| + | |} | ||
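
The column-to-pattern mapping can be mimicked with Python's <code>re</code> module. The sketch below is illustrative only: <code>compile_patterns</code> and <code>capture</code> are hypothetical names, the first matching pattern wins, and using a search rather than a full-line match is an assumption about how the adapter applies the regular expression.

```python
import re


def compile_patterns(pattern_directive):
    """Turn 'cols=regex' mappings (newline separated) into (names, regex) pairs."""
    compiled = []
    for mapping in pattern_directive.split("\n"):
        columns, _, regex = mapping.strip().partition("=")
        # Keep only the column name, dropping an optional type such as "int".
        names = [column.split()[-1] for column in columns.split(",")]
        compiled.append((names, re.compile(regex)))
    return compiled


def capture(line, compiled):
    """Return {column: captured text} for the first pattern that matches, else None."""
    for names, regex in compiled:
        match = regex.search(line)
        if match:
            return dict(zip(names, match.groups()))
    return None
```

Each capture group is assigned to the column at the same position in the list, which is why the second mapping can name ''lname'' before ''fname''.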

| + | |||

| + | == Optional Line Number Directives == | ||

| + | |||

| + | === Skipping Lines Directive (optional) === | ||

| + | '''Syntax''' | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_skipLines=''number_of_lines''</span> | ||

| + | |||

| + | '''Overview''' | ||

| + | |||

| + | This directive controls the number of lines to skip from the top of the output. This is useful for ignoring a header at the top of the output. If not supplied, then no lines are skipped. From a performance standpoint, skipping lines is highly efficient. | ||

| + | |||

| + | '''Examples''' | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_skipLines="0"</span> (this is the default, don't skip any lines) | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_skipLines="1"</span> (skip the first line, for example if there is a header) | ||

| + | |||

| + | === Line Number Column Directive (optional) === | ||

| + | '''Syntax''' | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_linenum=''column_name''</span> | ||

| + | |||

| + | '''Overview''' | ||

| + | |||

| + | This directive controls the name of the column that will contain the line number. If not supplied, the default is "linenum". Notes about the line number: the first line is line number 1, and skipped or filtered-out lines are still counted in the numbering. For example, if _''skipLines''=2, then the first returned line will have a line number of 3. | ||

| + | |||

| + | '''Examples''' | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_linenum=""</span> (A line number column is not included in the table) | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_linenum="linenum"</span> (The column ''linenum'' will contain line numbers, this is the default) | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_linenum="rownum"</span> (The column ''rownum'' will contain line numbers) | ||
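
The numbering rule (skipped lines still count) is easy to check with a tiny Python sketch. The function name <code>number_lines</code> is hypothetical and the code only models the behaviour described above.

```python
def number_lines(lines, skip_lines=0):
    """Yield (line_number, text); numbering starts at 1 and counts skipped lines."""
    for number, text in enumerate(lines, start=1):
        if number > skip_lines:
            yield number, text
```

With two header lines and <code>skip_lines=2</code>, the first row produced carries line number 3, matching the example in the overview.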

| + | |||

| + | == Optional Line Filtering Directives == | ||

| + | |||

| + | === Filtering Out Lines Directive (optional) === | ||

| + | '''Syntax''' | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_filterOut=''regex''</span> | ||

| + | |||

| + | '''Overview''' | ||

| + | |||

| + | Any line that matches the supplied regular expression will be ignored. If not supplied, then no lines are filtered out. From a performance standpoint, this filter is applied before any other parsing, so ignoring lines with a filter-out directive is faster than, for example, using a WHERE clause. | ||

| + | |||

| + | '''Examples''' | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_filterOut="Test"</span> (ignore any lines containing the text ''Test'') | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_filterOut="^Comment"</span> (ignore any lines starting with ''Comment'') | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_filterOut="This|That"</span> (ignore any lines containing the text ''This'' or ''That'') | ||

| + | |||

| + | === Filtering In Lines Directive (optional) === | ||

| + | '''Syntax''' | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_filterIn=''regex''</span> | ||

| + | |||

| + | '''Overview''' | ||

| + | |||

| + | Only lines that match the supplied regular expression will be considered. If not supplied, then all lines are considered. From a performance standpoint, this filter is applied before any other parsing, so narrowing down the lines with a filter-in directive is faster than, for example, using a WHERE clause. If you use a grouping (...) inside the regular expression, then only the contents of the first grouping will be considered for parsing. | ||

| + | |||

| + | '''Examples''' | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_filterIn="3Forge"</span> (ignore any lines that don't contain the word ''3Forge'') | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_filterIn="^Outgoing"</span> (ignore any lines that don't start with ''Outgoing'') | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_filterIn="Data(.*)"</span> (ignore any lines that don't contain ''Data'', and only consider the text after the word ''Data'' for processing) | ||
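
The two filters can be modelled in Python as below. This is a sketch of the described behaviour rather than AMI's code: <code>apply_filters</code> is a hypothetical name, and both the order in which the two filters are applied and the use of a substring search are assumptions.

```python
import re


def apply_filters(lines, filter_in=None, filter_out=None):
    """Yield the text that would be handed to the parser after both filters."""
    for line in lines:
        if filter_out is not None and re.search(filter_out, line):
            continue                 # line matches _filterOut: ignore it
        if filter_in is not None:
            match = re.search(filter_in, line)
            if not match:
                continue             # line does not match _filterIn: ignore it
            if match.re.groups:      # a grouping narrows the text that is parsed
                line = match.group(1)
        yield line
```

Because the filters run before any field parsing, discarded lines never reach the delimiter, key/value, or pattern logic.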

| + | |||

| + | = SSH Adapter = | ||

| + | |||

| + | == Overview == | ||

| + | The AMI SSH Datasource Adapter is a highly configurable adapter designed to execute shell commands and capture the stdout, stderr and exitcode on remote hosts via the secure ssh protocol. There are a number of directives which can be used to control how the command is executed, including supplying data to be passed to stdin. The adapter processes the output from the command. Each line (delineated by a Line feed) is considered independently for parsing. Note the EXECUTE <sql> clause supports the full AMI sql language. | ||

| + | |||

| + | Please note, that running the command will produce 3 tables: | ||

| + | |||

| + | * Stdout - Contains the contents of standard out | ||

| + | * Stderr - Contains the contents of standard error | ||

| + | * exitCode - Contains the exit code of the process | ||

| + | |||

| + | (You can limit which tables are returned using the ''_capture'' directive) | ||

| + | |||

| + | Generally speaking, the parser can handle '''four''' ('''4''') different methods of parsing: | ||

| + | |||

| + | === Delimited list of ordered fields === | ||

| + | Example data and query: | ||

| + | |||

| + | ''11232''|''1000''|''123.20'' | ||

| + | |||

| + | ''12412''|''8900''|''430.90''<syntaxhighlight lang="sql"> | ||

| + | CREATE TABLE mytable AS USE _cmd="my_cmd" _delim="|" | ||

| + | _fields="String account, Integer qty, Double px" | ||

| + | EXECUTE SELECT * FROM cmd | ||

| + | |||

| + | |||

| + | </syntaxhighlight> | ||

| + | |||

| + | === Key value pairs === | ||

| + | Example data and query: | ||

| + | |||

| + | account=''11232''|quantity=''1000''|price=''123.20'' | ||

| + | |||

| + | account=''12412''|quantity=''8900''|price=''430.90''<syntaxhighlight lang="sql"> | ||

| + | CREATE TABLE mytable AS USE _cmd="my_cmd" _delim="|" _equals="=" | ||

| + | _fields="String account, Integer qty, Double px" | ||

| + | EXECUTE SELECT * FROM cmd | ||

| + | </syntaxhighlight> | ||

| + | |||

| + | === Pattern Capture === | ||

| + | Example data and query: | ||

| + | |||

| + | Account ''11232'' has ''1000'' shares at $''123.20'' px | ||

| + | |||

| + | Account ''12412'' has ''8900'' shares at $''430.90'' px<syntaxhighlight lang="sql"> | ||

| + | CREATE TABLE mytable AS USE _cmd="my_cmd" | ||

| + | _fields="String account, Integer qty, Double px" | ||

| + | _pattern="account,qty,px=Account (.*) has (.*) shares at \\$(.*) px" | ||

| + | EXECUTE SELECT * FROM cmd | ||

| + | </syntaxhighlight> | ||

| + | |||

| + | === Raw Line === | ||

| + | If you do not specify a _''fields'', _''mappings'' or _''pattern'' directive, then the parser defaults to a simple row-per-line parser. A "line" column is generated containing the entire contents of each line from the command's output.<syntaxhighlight lang="sql"> | ||

| + | CREATE TABLE mytable AS USE _cmd="my_cmd" EXECUTE SELECT * FROM cmd | ||

| + | </syntaxhighlight> | ||

| + | |||

| + | == Configuring the Adapter for first use == | ||

| + | 1. Open the '''datamodeler''' (In Developer Mode -> Menu Bar -> Dashboard -> Datamodel) | ||

| + | |||

| + | 2. Choose the "'''Add Datasource'''" button | ||

| + | |||

| + | 3. Choose '''SSH Command''' adapter | ||

| + | |||

| + | 4. In the Add datasource dialog: | ||

| + | |||

| + | '''Name''': Supply a user defined Name, ex: ''MyShell'' | ||

| + | |||

| + | '''URL''': ''hostname'' or ''hostname:port'' | ||

| + | |||

| + | '''Username''': the name of the ssh user to login as | ||

| + | |||

| + | '''Password''': the password of the ssh user to login as | ||

| + | |||

| + | Options: See below, note when using multiple options they should be comma delimited | ||

| + | |||

| + | * For servers requiring keyboard interactive authentication: ''authMode=keyboardInteractive'' | ||

| + | * To use a public/private key for authentication: ''publicKeyFile=/path/to/key/file'' ''(Note this is often /your_home_dir/.ssh/id_rsa)'' | ||

| + | * To request a dumb pty connection: ''useDumbPty=true'' | ||

| + | |||

| + | 5. Click "Add Datasource" Button | ||

| + | |||

| + | '''Running Commands Remotely''': You can also execute commands on remote machines using an ''AMI Relay''. First install an AMI relay on the machine where the command should be executed (see the ''AMI for the Enterprise'' documentation for installation details). Then, in the Add Datasource wizard, select the relay in the "Relay To Run On" dropdown. | ||

| + | |||

| + | == General Directives == | ||

| + | |||

| + | === Command Directive (Required) === | ||

| + | '''Syntax''' | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_cmd="''command to run"''</span> | ||

| + | |||

| + | '''Overview''' | ||

| + | |||

| + | This directive controls the command to execute. | ||

| + | |||

| + | '''Examples''' | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_cmd="ls -lrt"</span> (execute ls -lrt) | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_cmd="ls | sort"</span> (execute ls and pipe that into sort) | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_cmd="dir /s"</span> (execute dir on a windows system) | ||

| + | |||

| + | === Supplying Standard Input (Optional) === | ||

| + | '''Syntax''' | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_stdin="''text to pipe into stdin"''</span> | ||

| + | |||

| + | '''Overview''' | ||

| + | |||

| + | This directive is used to control what data is piped into the standard in (stdin) of the process to run. | ||

| + | |||

| + | '''Example''' | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_cmd="cat > out.txt" _stdin="hello world"</span> (will pipe "hello world" into out.txt) | ||

| + | |||

| + | === Controlling what is captured from the Process (Optional) === | ||

| + | '''Syntax''' | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_capture="comma_delimited_list"</span> (''default is stdout,stderr,exitCode'') | ||

| + | |||

| + | '''Overview''' | ||

| + | |||

| + | This directive is used to control what output data from running the command is captured. It is a comma delimited list and the order determines what order the tables are returned in. An empty list ("") indicates that nothing will be captured (the command is executed, and all output is ignored). Options include the following: | ||

| + | |||

| + | *<span style="font-family: Courier New; color: blue;">stdout</span> - Capture standard out from the process | ||

| + | *<span style="font-family: Courier New; color: blue;">stderr</span> - Capture standard error from the process | ||

| + | *<span style="font-family: Courier New; color: blue;">exitCode</span> - Capture the exit code from the process | ||

| + | '''Example''' | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_capture="exitCode,stdout"</span> (the 1<sup>st</sup> table will contain the exit code, 2<sup>nd</sup> will contain stdout) | ||

| + | |||

| + | === Field Definitions Directive (Required) === | ||

| + | '''Syntax''' | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_fields=''col1_type col1_name, col2_type col2_name, ...''</span> | ||

| + | |||

| + | '''Overview''' | ||

| + | |||

| + | This directive controls the Column names that will be returned, along with their types. The order in which they are defined is the same as the order in which they are returned. If the column type is not supplied, the default is String. Special note on additional columns: If the line number (see _''linenum'' directive) column is not supplied in the list, it will default to type integer and be added to the end of the table schema. Columns defined in the Pattern (see _''pattern'' directive) but not defined in _''fields'' will be added to the end of the table schema. | ||

| + | |||

| + | Types should be one of: <span style="font-family: Courier New; color: blue;">''String, Long, Integer, Boolean, Double, Float, UTC''</span> | ||

| + | |||

| + | Column names must be valid variable names. | ||

| + | |||

| + | '''Examples''' | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_fields="String account,Long quantity"</span> (define two columns) | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_fields="fname,lname,int age"</span> (define 3 columns, ''fname'' and ''lname'' default to String) | ||

| + | |||

| + | === Environment Directive (Optional) === | ||

| + | '''Syntax''' | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_env="''key=value,key=value,...''"</span> (Optional) | ||

| + | |||

| + | '''Overview''' | ||

| + | |||

| + | This directive controls what environment variables are set when running a command | ||

| + | |||

| + | '''Example''' | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_env="name=Rob,Location=NY"</span> | ||

| + | |||

| + | === Use Host Environment Directive (Optional) === | ||

| + | '''Syntax''' | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_useHostEnv=true|false</span> (Optional. Default is false) | ||

| + | |||

| + | '''Overview''' | ||

| + | |||

| + | If true, the environment properties of the AMI process executing the command are passed to the shell. Note that _''env'' values can be used to override specific environment variables. | ||

| + | |||

| + | '''Example''' | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_useHostEnv="true"</span> | ||

| + | |||

| + | == Directives for parsing Delimited list of ordered Fields == | ||

| + | <span style="font-family: Courier New; color: blue;">_cmd=''command_to_execute''</span> ('''Required''', see general directives) | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_fields=''col1_type col1_name, ...''</span> ('''Required''', see general directives) | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_delim=delim_string</span> ('''Required''') | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_conflateDelim=true|false</span> (Optional. Default is false) | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">''_quote=single_quote_char''</span> (Optional) | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">''_escape=single_escape_char''</span> (Optional) | ||

| + | |||

| + | The _''delim'' directive indicates the char (or chars) used to separate each field (if _''conflateDelim'' is true, then 1 or more consecutive delimiters are treated as a single delimiter). The _''fields'' directive is an ordered list of types and field names for each of the delimited fields. If _''quote'' is supplied, then a field value starting with the ''quote'' char will be read until another ''quote'' char is found, meaning delimiters within quotes will not be treated as delimiters. If the _''escape'' char is supplied, then when an ''escape'' char is read, it is skipped and the following char is read as a literal. | ||

| + | |||

| + | '''Examples''' | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_delim="|"</span> | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_fields="code,lname,int age"</span> | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_quote="'"</span> | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_escape="\\"</span> | ||

| + | |||

| + | This defines a pattern such that: | ||

| + | |||

| + | ''11232-33|Smith|20'' | ||

| + | |||

| + | ''<nowiki/>'1332|ABC'||30'' | ||

| + | |||

| + | ''Account\|112|Jones|18'' | ||

| + | |||

| + | Maps to: | ||

| + | {| class="wikitable" | ||

| + | !code | ||

| + | !lname | ||

| + | !age | ||

| + | |- | ||

| + | |11232-33 | ||

| + | |Smith | ||

| + | |20 | ||

| + | |- | ||

| + | |<nowiki>1332|ABC</nowiki> | ||

| + | | | ||

| + | |30 | ||

| + | |- | ||

| + | |<nowiki>Account|112</nowiki> | ||

| + | |Jones | ||

| + | |18 | ||

| + | |} | ||

| + | |||

| + | == Directives for parsing Key Value Pairs == | ||

| + | <span style="font-family: Courier New; color: blue;">_cmd=''command_to_execute''</span> ('''Required''', see general directives) | ||

| − | + | <span style="font-family: Courier New; color: blue;">_fields=''col1_type col1_name, ...''</span> ('''Required''', see general directives) | |

| − | + | <span style="font-family: Courier New; color: blue;">_delim=delim_string</span> ('''Required''') | |

| − | + | <span style="font-family: Courier New; color: blue;">_conflateDelim=true|false</span> (Optional. Default is false) | |

| − | + | <span style="font-family: Courier New; color: blue;">''_equals=single_equals_char''</span> ('''Required''') | |

| − | _escape="\\" | + | <span style="font-family: Courier New; color: blue;">_mappings=from1=to1,from2=to2,...</span> (Optional) |

| + | |||

| + | <span style="font-family: Courier New; color: blue;">''_quote=single_quote_char''</span> (Optional) | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">''_escape=single_escape_char''</span> (Optional) | ||

| + | |||

| + | The _''delim'' indicates the char (or chars) used to separate each field (If _''conflateDelim'' is true, then 1 or more consecutive delimiters are treated as a single delimiter). The _equals char is used to indicate the key/value separator. The _''fields'' is an ordered list of types and field names for each of the delimited fields. If the _''quote'' is supplied, then a field value starting with ''quote'' will be read until another ''quote'' char is found, meaning ''delim''s within ''quotes'' will not be treated as delims. If the _''escape'' char is supplied then when an ''escape'' char is read, it is skipped and the following char is read as a literal. | ||

| + | |||

| + | The optional _''mappings'' directive allows you to map keys within the output to field names specified in the _''fields'' directive. This is useful when the output has key names that are not valid field names, or when the output has multiple key names that should populate the same column. | ||

| + | |||

| + | '''Examples''' | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_delim="|"</span> | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_equals="="</span> | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_fields="code,lname,int age"</span> | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_mappings="20=code,21=lname,22=age"</span> | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_quote="'"</span> | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_escape="\\"</span> | ||

This defines a pattern such that: | This defines a pattern such that: | ||

| Line 211: | Line 1,159: | ||

== Directives for Pattern Capture == | == Directives for Pattern Capture == | ||

| − | + | <span style="font-family: Courier New; color: blue;">_cmd=''command_to_execute''</span> ('''Required''', see general directives) | |

| − | _fields=''col1_type col1_name, ...'' (Optional, see general directives) | + | <span style="font-family: Courier New; color: blue;">_fields=''col1_type col1_name, ...''</span> (Optional, see general directives) |

| − | _''pattern''=''col1_type col1_name, ...=regex_with_grouping'' ('''Required''') | + | <span style="font-family: Courier New; color: blue;">_''pattern''=''col1_type col1_name, ...=regex_with_grouping''</span> ('''Required''') |

The ''_pattern'' must start with a list of column names, followed by an equal sign (=) and then a regular expression with grouping (this is dubbed a column-to-pattern mapping). The regular expression's first grouping value will be mapped to the first column, 2<sup>nd</sup> grouping to the second and so on. | The ''_pattern'' must start with a list of column names, followed by an equal sign (=) and then a regular expression with grouping (this is dubbed a column-to-pattern mapping). The regular expression's first grouping value will be mapped to the first column, 2<sup>nd</sup> grouping to the second and so on. | ||

| Line 223: | Line 1,171: | ||

For multiple column-to-pattern mappings, use the \n (new line) to separate each one. | For multiple column-to-pattern mappings, use the \n (new line) to separate each one. | ||

| − | + | '''Examples''' | |

| − | _pattern="fname,lname,int age=User (.*) (.*) is (.*) years old" | + | |

| + | <span style="font-family: Courier New;color: blue;">_pattern="fname,lname,int age=User (.*) (.*) is (.*) years old"</span> | ||

This defines a pattern such that: | This defines a pattern such that: | ||

| Line 246: | Line 1,195: | ||

|30 | |30 | ||

|} | |} | ||

| − | <span style="font-family: Courier New; color: blue;">_pattern="fname,lname,int age=User (.*) (.*) is (.*) years old\n lname,fname,int weight=Customer (.*),(.*) weighs (.*) pounds</span> | + | <span style="font-family: Courier New; color: blue;">_pattern="fname,lname,int age=User (.*) (.*) is (.*) years old\n lname,fname,int weight=Customer (.*),(.*) weighs (.*) pounds"</span> |

This defines two patterns such that: | This defines two patterns such that: | ||

| Line 252: | Line 1,201: | ||

''User John Smith is 20 years old'' | ''User John Smith is 20 years old'' | ||

| − | ''User Boy,Bobby weighs 130 pounds'' | + | ''Customer Boy,Bobby weighs 130 pounds'' |

| + | |||

| + | Maps to: | ||

| + | {| class="wikitable" | ||

| + | !fname | ||

| + | !lname | ||

| + | !age | ||

| + | !weight | ||

| + | |- | ||

| + | |John | ||

| + | |Smith | ||

| + | |20 | ||

| + | | | ||

| + | |- | ||

| + | |Bobby | ||

| + | |Boy | ||

| + | | | ||

| + | |130 | ||

| + | |} | ||
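The column-to-pattern mapping can be approximated in Python to see how capture groups line up with columns. This is a minimal sketch, not AMI's implementation: the helper names <code>parse_pattern_directive</code> and <code>capture</code> are hypothetical, declared types such as ''int'' are ignored, and captured values are kept as strings.

```python
import re


def parse_pattern_directive(pattern):
    """Split a _pattern value into (column_names, compiled_regex) pairs."""
    mappings = []
    for entry in pattern.split("\n"):
        # Everything before the first "=" is the column list, the rest is the regex.
        columns, regex = entry.strip().split("=", 1)
        # Keep only the column name; an optional type such as "int" precedes it.
        names = [col.split()[-1] for col in columns.split(",")]
        mappings.append((names, re.compile(regex)))
    return mappings


def capture(line, mappings):
    """Return column -> captured text for the first pattern that matches."""
    for names, regex in mappings:
        match = regex.search(line)
        if match:
            # The first grouping maps to the first column, and so on.
            return dict(zip(names, match.groups()))
    return None


PATTERN = ("fname,lname,int age=User (.*) (.*) is (.*) years old\n"
           " lname,fname,int weight=Customer (.*),(.*) weighs (.*) pounds")
mappings = parse_pattern_directive(PATTERN)
rows = [capture(line, mappings) for line in (
    "User John Smith is 20 years old",
    "Customer Boy,Bobby weighs 130 pounds",
)]
print(rows)
```

Each line is matched by only one of the two patterns, so the columns belonging to the other pattern are simply absent from that row, which corresponds to the empty cells in the table.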

| + | |||

| + | == Optional Line Number Directives == | ||

| + | |||

| + | === Skipping Lines Directive (optional) === | ||

| + | '''Syntax''' | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_skipLines=''number_of_lines''</span> | ||

| + | |||

| + | '''Overview''' | ||

| + | |||

| + | This directive controls the number of lines to skip from the top of the output. This is useful for ignoring a header at the top of the output. If not supplied, then no lines are skipped. From a performance standpoint, skipping lines is highly efficient. | ||

| + | |||

| + | '''Examples''' | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_skipLines="0"</span> (this is the default, don't skip any lines) | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_skipLines="1"</span> (skip the first line, for example if there is a header) | ||

| + | |||

| + | === Line Number Column Directive (optional) === | ||

| + | '''Syntax''' | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_linenum=''column_name''</span> | ||

| + | |||

| + | '''Overview''' | ||

| + | |||

| + | This directive controls the name of the column that will contain the line number. If not supplied, the default is "linenum". Notes about the line number: the first line is line number 1, and skipped or filtered-out lines still count toward the numbering. For example, if _''skipLines''=2, then the first line returned will have a line number of 3. | ||

| + | |||

| + | '''Examples''' | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_linenum=""</span> (A line number column is not included in the table) | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_linenum="linenum"</span> (The column ''linenum'' will contain line numbers, this is the default) | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_linenum="rownum"</span> (The column ''rownum'' will contain line numbers) | ||
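The numbering rule is easiest to see with a small Python sketch. It assumes a hypothetical helper, <code>read_with_line_numbers</code>, and a hypothetical <code>text</code> column holding the raw line; it illustrates the behavior described above and is not how AMI reads input internally.

```python
def read_with_line_numbers(lines, skip_lines=0, linenum="linenum"):
    """Skip the first skip_lines lines and tag the rest with their original line number."""
    rows = []
    for number, text in enumerate(lines, start=1):  # the first line is number 1
        if number <= skip_lines:
            continue  # skipped lines still consume a line number
        row = {"text": text}
        if linenum:  # an empty name means no line number column
            row[linenum] = number
        rows.append(row)
    return rows


rows = read_with_line_numbers(["header 1", "header 2", "a", "b"], skip_lines=2)
print(rows)
```

With two lines skipped, the first returned row carries line number 3, matching the example in the overview.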

| + | |||

| + | == Optional Line Filtering Directives == | ||

| + | |||

| + | === Filtering Out Lines Directive (optional) === | ||

| + | '''Syntax''' | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_filterOut=''regex''</span> | ||

| + | |||

| + | '''Overview''' | ||

| + | |||

| + | Any line that matches the supplied regular expression will be ignored. If not supplied, then no lines are filtered out. From a performance standpoint, this is applied before any other parsing, so ignoring lines with a filter-out directive is faster than, for example, using a WHERE clause. | ||

| + | |||

| + | '''Examples''' | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_filterOut="Test"</span> (ignore any lines containing the text ''Test'') | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_filterOut="^Comment"</span> (ignore any lines starting with ''Comment'') | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_filterOut="This|That"</span> (ignore any lines containing the text ''This'' or ''That'') | ||
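The three examples above behave like a regular expression search applied to each line before parsing. The following Python sketch assumes "find anywhere in the line" semantics, which is what the examples suggest; the function name <code>filter_out</code> and the sample lines are hypothetical.

```python
import re


def filter_out(lines, regex):
    """Drop every line in which the regular expression is found."""
    pattern = re.compile(regex)
    return [line for line in lines if not pattern.search(line)]


lines = ["Comment: generated file", "Test order 1", "This is an order", "Order 42"]
print(filter_out(lines, "Test"))        # drops the line containing "Test"
print(filter_out(lines, "^Comment"))    # drops the line starting with "Comment"
print(filter_out(lines, "This|That"))   # drops the line containing "This"
```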

| + | |||

| + | === Filtering In Lines Directive (optional) === | ||

| + | '''Syntax''' | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_filterIn=''regex''</span> | ||

| + | |||

| + | '''Overview''' | ||

| + | |||

| + | Only lines that match the supplied regular expression will be considered. If not supplied, then all lines are considered. From a performance standpoint, this is applied before any other parsing, so narrowing down the lines with a filter-in directive is faster than, for example, using a WHERE clause. If you use a grouping (...) inside the regular expression, then only the contents of the first grouping will be considered for parsing. | ||

| + | |||

| + | '''Examples''' | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_filterIn="3Forge"</span> (ignore any lines that don't contain the word ''3Forge'') | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_filterIn="^Outgoing"</span> (ignore any lines that don't start with ''Outgoing'') | ||

| + | |||

| + | <span style="font-family: Courier New; color: blue;">_filterIn="Data(.*)"</span> (ignore any lines that don't contain ''Data'', and only consider the text after the word ''Data'' for processing) | ||
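The effect of a grouping inside a filter-in expression can be sketched in Python. As with the other sketches, this assumes search semantics and uses a hypothetical function name, <code>filter_in</code>, with made-up sample lines; it is an illustration rather than AMI's implementation.

```python
import re


def filter_in(lines, regex):
    """Keep only matching lines; if the regex has a grouping, keep just the first group's text."""
    pattern = re.compile(regex)
    kept = []
    for line in lines:
        match = pattern.search(line)
        if match is None:
            continue  # the line does not match, so it is ignored
        kept.append(match.group(1) if pattern.groups else line)
    return kept


lines = ["Data: a,b,c", "Noise", "Outgoing Data x"]
print(filter_in(lines, "^Outgoing"))  # whole line is kept
print(filter_in(lines, "Data(.*)"))   # only the text after "Data" is kept
```

Without a grouping the whole line is passed on for parsing; with one, only the captured text is.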

| + | |||

| + | [[File:1.5.png|thumb]] | ||

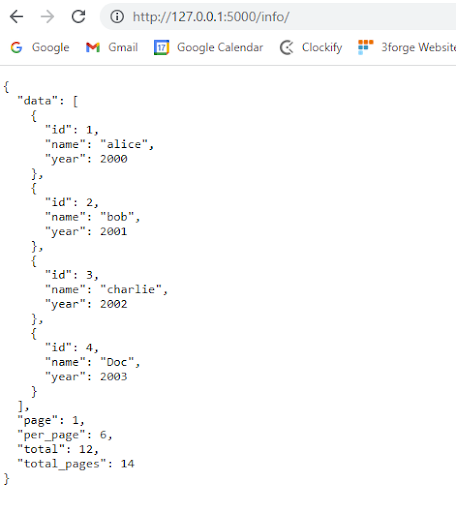

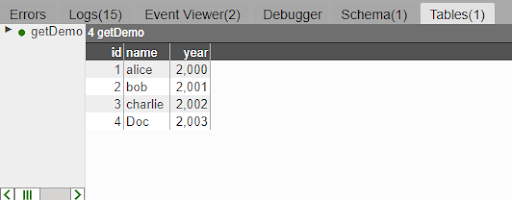

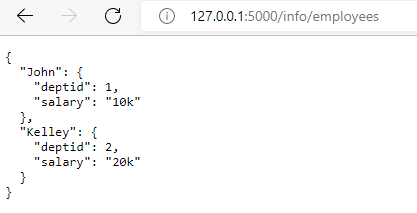

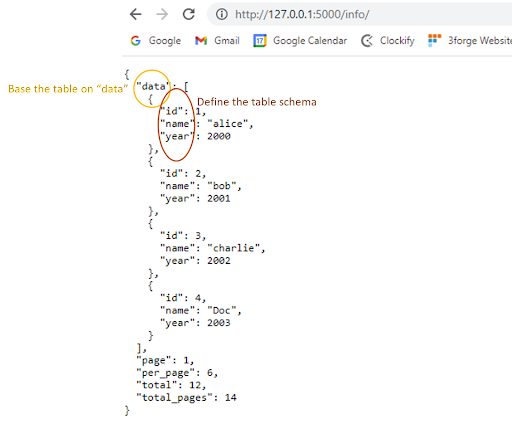

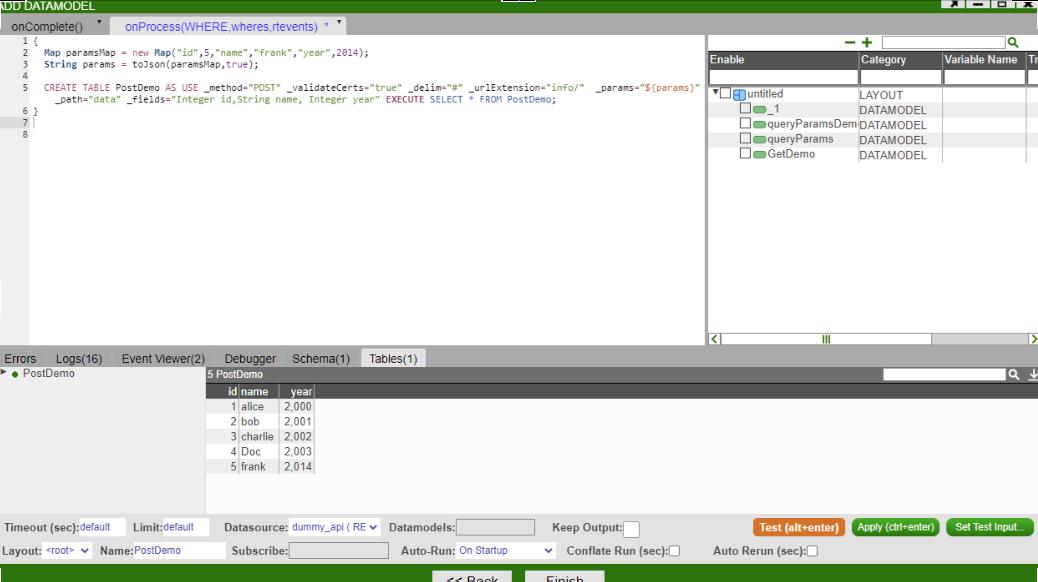

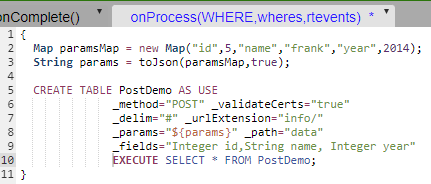

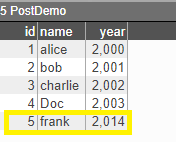

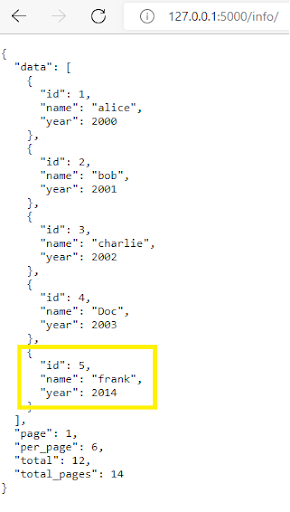

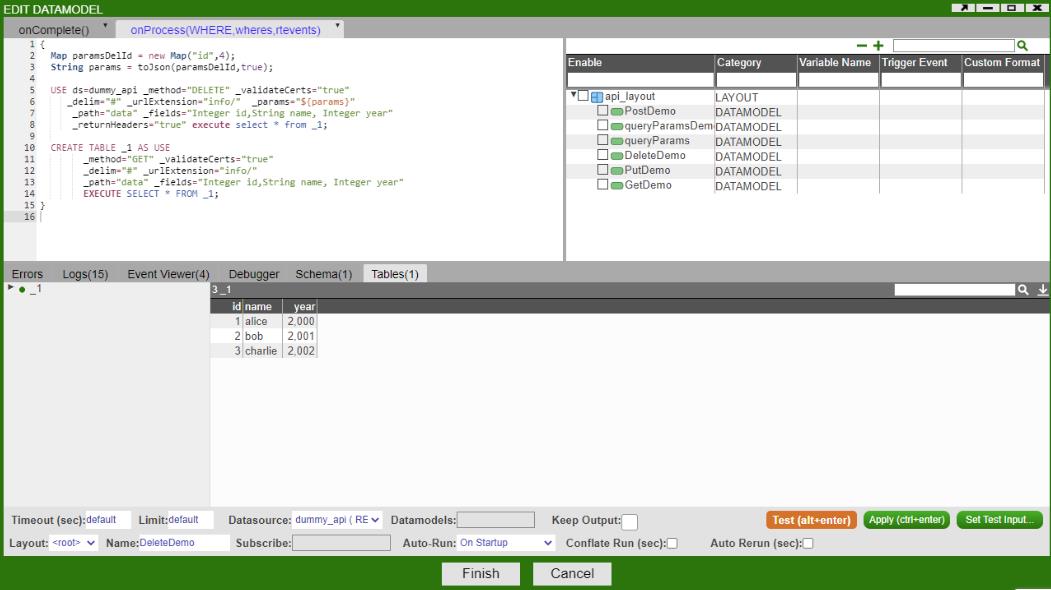

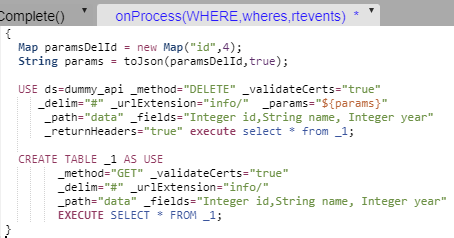

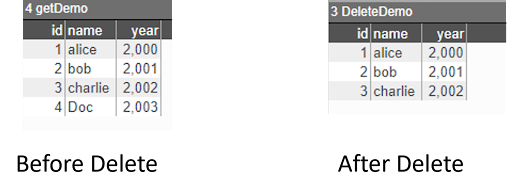

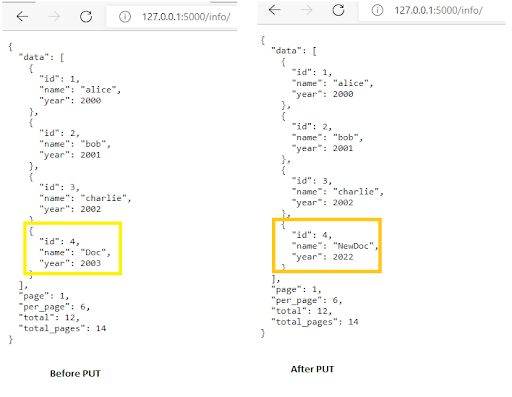

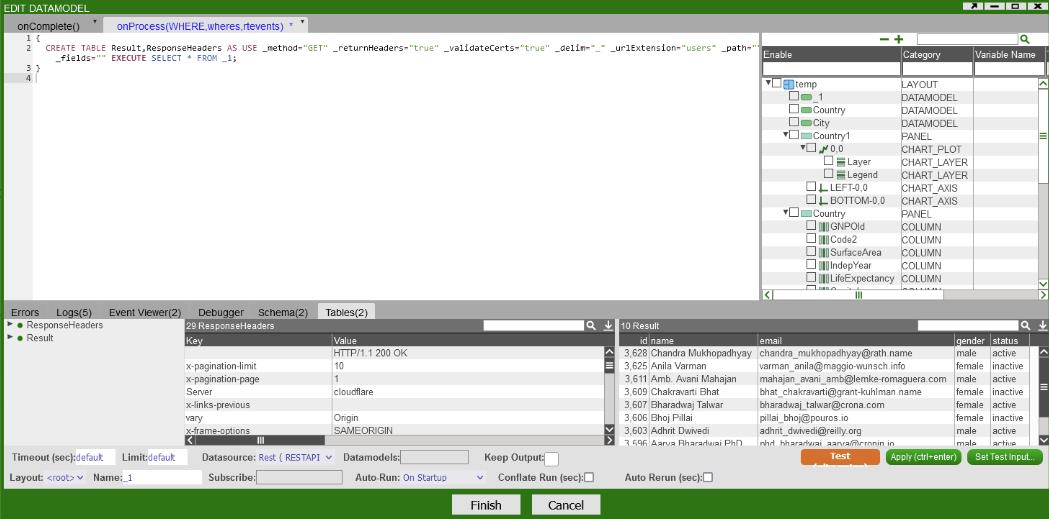

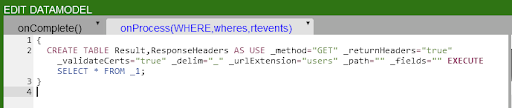

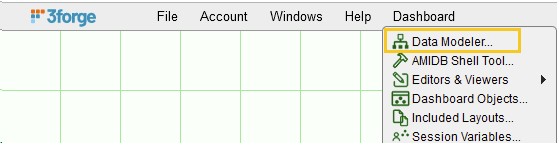

| + | = REST Adapter = | ||

| + | == Overview == | ||

| + | The AMI REST adapter bridges AMI and RESTful APIs so that you can interact with a RESTful API from within AMI. The instructions below cover how to add a REST API adapter and use it. | ||

| + | |||

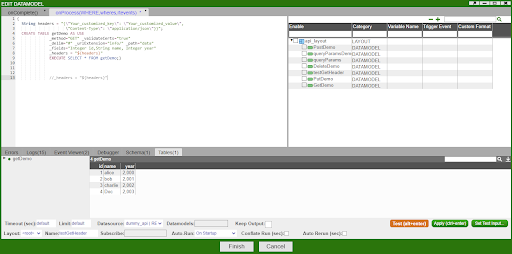

| + | == Setting up your first Rest API Datasource == | ||

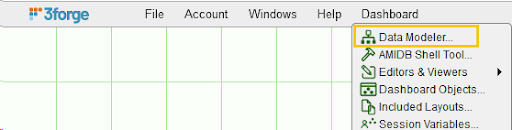

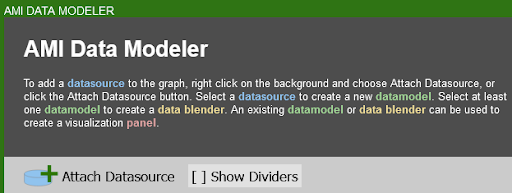

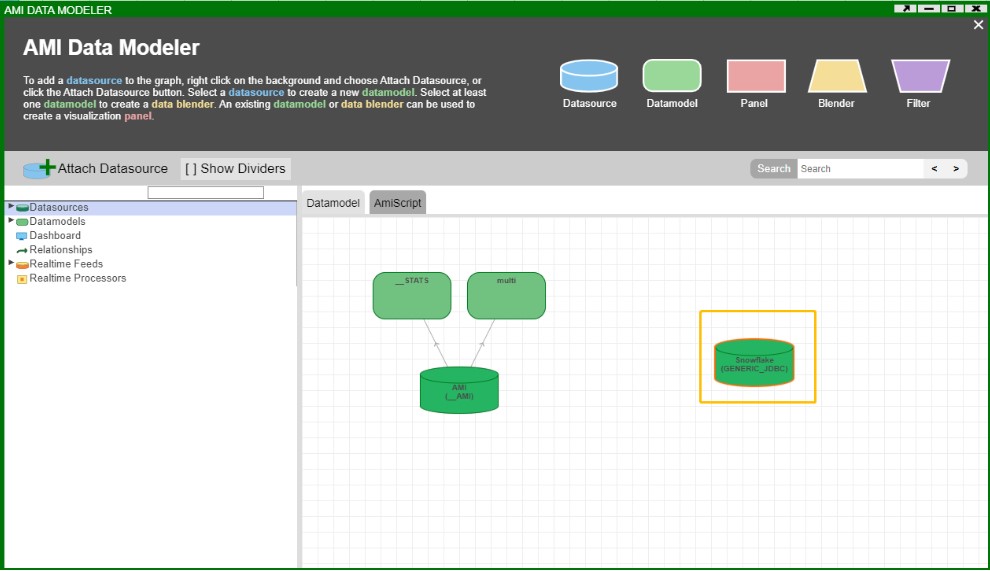

| + | 1. Go to '''Dashboard''' -> '''Datamodeler''' | ||

| + | |||

| + | [[File:1.1.png|650px]] | ||

| + | |||

| + | 2. Click '''Attach Datasource''' | ||

| + | |||

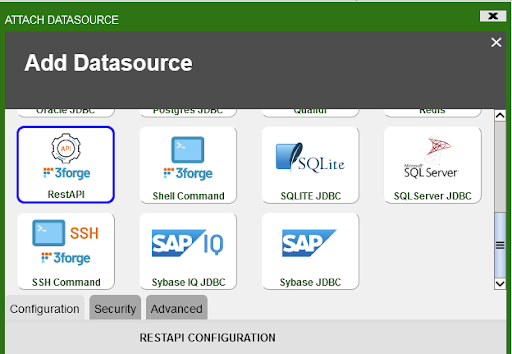

| + | [[File:1.2.png|650px]] | ||

| + | |||

| + | 3. Select the '''RestAPI''' Datasource Adapter | ||

| + | |||

| + | [[File:1.3.png|650px]] | ||

| + | |||

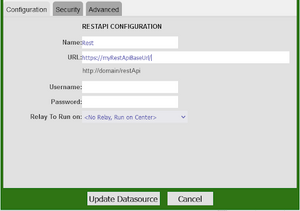

| + | 4. Fill in the fields: | ||

| + | |||

| + | <code> | ||

| + | <strong>Name</strong>: The name of the Datasource <br> | ||

| + | <strong>URL</strong>: The base URL of the target API. (Alternatively, you can use the direct URL to the REST API; see the directive _urlExtensions for more information) <br> | ||

| + | <strong>Username</strong>: (Username for basic authentication)<br> | ||

| + | <strong>Password</strong>: (Password for basic authentication)<br> | ||

| + | </code> | ||

| + | |||

| + | |||

| + | |||

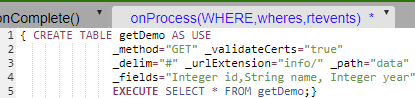

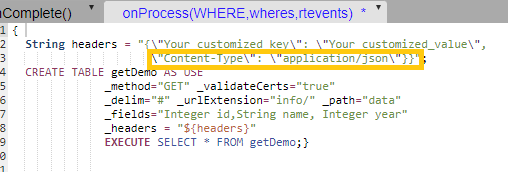

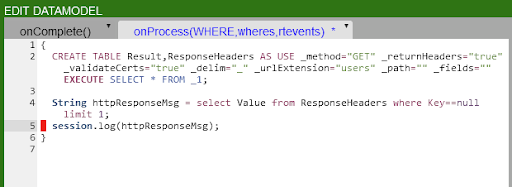

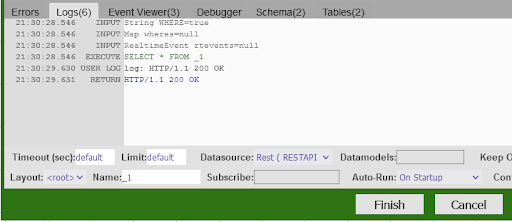

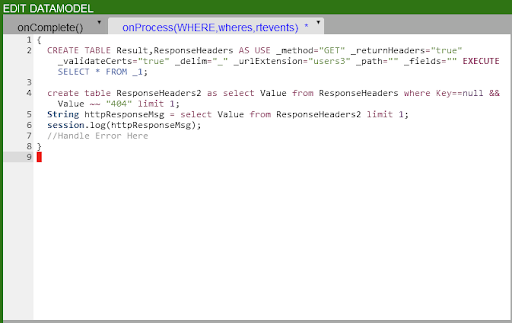

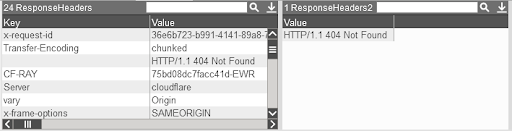

| + | == How to use the 3forge RestAPI Adapter == | ||

| + | |||

| + | === The available directives you can use are === | ||

| + | |||

| + | |||

| + | *'''_method''' = GET, POST, PUT, DELETE | ||

| + | *'''_validateCerts''' = (true or false) Indicates whether or not to validate the certificate. By default, certificates are validated. | ||

| + | *'''_path''' = The path to the object that you want to treat as a table (by default, the value is an empty string, which corresponds to the response JSON itself) | ||

| + | *'''_delim''' = This is the delimiter used to grab nested values | ||

| + | *'''_urlExtensions''' = Any string that you want to add to the end of the Datasource URL | ||

| + | *'''_fields''' = A comma-delimited list of the fields you want, each given as a type followed by a name. If none is provided, AMI guesses the types and fields | ||

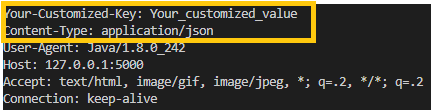

| + | *'''_headers or _header_xxxx''': Any headers you would like to send in the REST request. (If you use both, the headers from both types of directives are sent) | ||

| + | *'''_headers''' expects a valid JSON string | ||

| + | *'''_header_xxxx''' is used to supply headers as key-value pairs | ||

| + | *'''_params or _param_xxxx''' is used to provide params to the REST request. (If you provide both, they will be joined) | ||

| + | *'''_params''' will send the values as is | ||

| + | *'''_param_xxxx''' is a way to provide key-value params that are joined with the delimiter & and the associator | ||

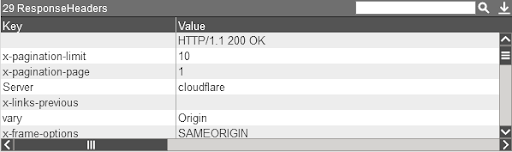

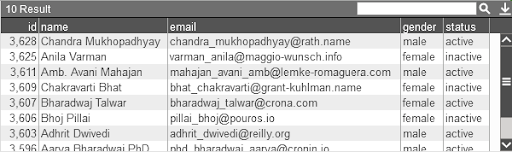

| + | *'''_returnHeaders''' = (true or false) Enables AMI to return the response headers in a table. When set to true, two tables are returned: the result and the response headers. See the section on ReturnHeaders Directive for more details and examples. | ||
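To make the roles of a few of these directives concrete, the Python sketch below shows one way they could combine into a request and how a path could select a nested object from the response. It is an illustration under stated assumptions, not the adapter's code: the function names, the example URL and header names, the default method of GET, the <code>.</code> default for the nested-value delimiter, and the rule that a <code>_header_xxxx</code> value wins over the same key in <code>_headers</code> are all hypothetical.

```python
import json


def build_request(base_url, directives):
    """Combine the datasource URL with _urlExtensions and merge both header directives."""
    url = base_url + directives.get("_urlExtensions", "")
    # _headers is expected to be a valid JSON string.
    headers = json.loads(directives.get("_headers", "{}"))
    prefix = "_header_"
    for name, value in directives.items():
        if name.startswith(prefix):
            # _header_xxxx contributes the header "xxxx" (assumed to override _headers).
            headers[name[len(prefix):]] = value
    return {"method": directives.get("_method", "GET"), "url": url, "headers": headers}


def select_path(response, path="", delim="."):
    """Walk the response JSON along path; an empty path returns the whole response."""
    node = response
    if path:
        for key in path.split(delim):
            node = node[key]
    return node


request = build_request("https://example.com/api", {
    "_method": "POST",
    "_urlExtensions": "/users",
    "_headers": '{"Accept": "application/json"}',
    "_header_X-Token": "abc",
})
response = {"data": {"items": [{"id": 1}, {"id": 2}]}}
print(request)
print(select_path(response, "data.items"))
```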

| + | |||

| + | |||